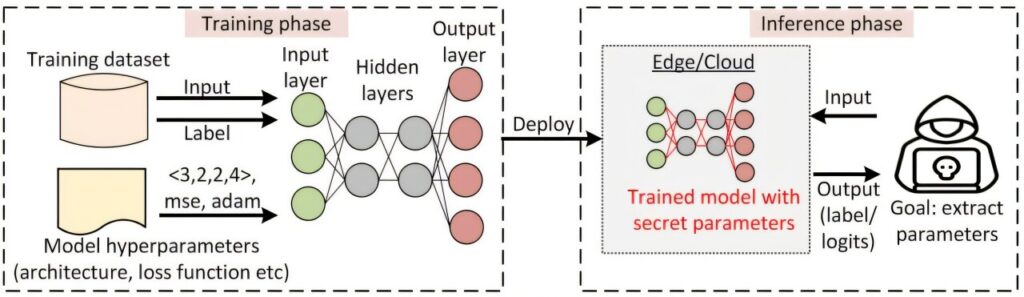

Schematic diagram showing how a deployed neural network becomes vulnerable to attacks. Credit: arXiv (2025). DOI: 10.48550/arxiv.2509.16546

Security researchers have developed the first functional defense mechanism that can protect against “cryptanalysis” attacks used to “steal” model parameters that define how an AI system operates.

“AI systems are valuable intellectual property, and cryptanalytic parameter extraction attacks are the most efficient, effective, and accurate way to ‘steal’ that intellectual property. Until now, there has been no way to defend against these attacks. Our technique effectively defends against them,” said Ashley Kurian, a Ph.D. student at North Carolina State University and first author of a paper on the research, which is currently available on the arXiv preprint server.

“Cryptanalytic attacks are already occurring, and they are becoming more frequent and more efficient,” said corresponding author Aydin Aysu, associate professor of electrical and computer engineering at North Carolina State University. “We need to implement defense mechanisms now, because implementing them after an AI model's parameters have been extracted is too late.”

At issue are cryptanalytic parameter extraction attacks. Parameters are the essential information used to describe an AI model. Essentially, parameters are how an AI system performs its tasks. A cryptanalytic parameter extraction attack is a purely mathematical way of determining what a given AI model's parameters are, allowing a third party to recreate the AI system.

“In a cryptanalytic attack, someone submits inputs and examines the outputs,” Aysu said. “They then use a mathematical function to determine what the parameters are. So far, these attacks have only worked against a type of AI model called a neural network. However, many, if not most, commercial AI systems are neural networks, including large language models such as ChatGPT.”
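The query-and-solve idea Aysu describes can be illustrated on the simplest possible case. The sketch below is not the attack studied in the paper, which targets full neural networks. It assumes a single linear neuron with no activation function, and the names `make_black_box` and `extract_linear_neuron` are hypothetical. It shows only how chosen inputs and observed outputs can pin down hidden parameters exactly.

```python
def make_black_box(weights, bias):
    """Return a query function that hides its parameters (hypothetical victim model)."""
    def query(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return query


def extract_linear_neuron(query, n_inputs):
    """Recover (weights, bias) of a linear neuron using n_inputs + 1 queries."""
    zero = [0.0] * n_inputs
    # An all-zero input removes every weight term, leaving only the bias.
    bias = query(zero)
    weights = []
    for i in range(n_inputs):
        unit = zero.copy()
        unit[i] = 1.0
        # A unit input isolates one weight: output = w_i + bias.
        weights.append(query(unit) - bias)
    return weights, bias


# Toy values chosen for illustration only.
secret_weights, secret_bias = [0.5, -2.0, 3.0], 1.0
victim = make_black_box(secret_weights, secret_bias)
recovered_weights, recovered_bias = extract_linear_neuron(victim, 3)
print(recovered_weights, recovered_bias)
```

Attacks on real networks are far more involved because of nonlinear activations and multiple layers, but the principle of solving for parameters from input-output pairs is the same.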

So how can we defend against mathematical attacks?

The new defense mechanism is based on important insights researchers have gained regarding cryptanalysis parameter extraction attacks. In analyzing these attacks, researchers identified core principles that all attacks rely on. To understand what they learned, you need to understand the basic architecture of neural networks.

The basic building blocks of neural network models are called neurons. Neurons are arranged in layers and are used in sequence to evaluate and respond to input data. Once the data is processed by the neurons in the first layer, the output of that layer is passed to the second layer. This process continues until the data is processed throughout the system, at which point the system decides how to respond to the input data.
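The layer-by-layer flow described above can be sketched in a few lines. This is a minimal illustration that assumes fully connected layers with ReLU activations between them. The function names and the toy weights are assumptions made for the example and do not come from the paper.

```python
def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]


def dense_layer(weight_rows, biases, inputs):
    """Each row of weight_rows holds the weights of one neuron in the layer."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weight_rows, biases)
    ]


def forward(layers, inputs):
    """Pass inputs through each layer in sequence; the last layer has no ReLU."""
    activations = inputs
    for index, (weight_rows, biases) in enumerate(layers):
        activations = dense_layer(weight_rows, biases, activations)
        if index < len(layers) - 1:
            activations = relu(activations)
    return activations


# Toy network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
toy_layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.5]),
]
toy_output = forward(toy_layers, [2.0, 1.0])
print(toy_output)
```

Each inner list in `weight_rows` corresponds to one neuron, which is the unit the defense described in this article operates on.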

“What we observed is that cryptanalytic attacks focus on the differences between neurons,” Kurian says. “And the more different the neurons are, the more effective the attack is. Our defense mechanism relies on training a neural network model in a way that makes neurons in the same layer of the model similar to each other. You can do this only in the first layer, or in multiple layers. And you can do it with all of the neurons in a layer, or only with a subset of neurons.”
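One way to picture training that makes neurons in a layer resemble each other is a penalty term added to the usual training loss. The sketch below is an assumption made for illustration, not the loss function used in the paper. It penalizes the mean squared distance between each neuron's weight vector and the layer average, and `similarity_penalty`, `regularized_loss`, and `strength` are hypothetical names.

```python
def similarity_penalty(weight_rows):
    """Mean squared distance of each neuron's weights from the layer average.

    Returns 0.0 when all neurons in weight_rows are identical.
    """
    n_neurons = len(weight_rows)
    n_weights = len(weight_rows[0])
    mean = [
        sum(row[j] for row in weight_rows) / n_neurons
        for j in range(n_weights)
    ]
    total = sum(
        (row[j] - mean[j]) ** 2
        for row in weight_rows
        for j in range(n_weights)
    )
    return total / n_neurons


def regularized_loss(task_loss, weight_rows, strength):
    """Hypothetical training objective: task loss plus a weighted similarity term."""
    return task_loss + strength * similarity_penalty(weight_rows)


identical = similarity_penalty([[1.0, 2.0], [1.0, 2.0]])
different = similarity_penalty([[1.0, 0.0], [3.0, 0.0]])
combined = regularized_loss(0.5, [[1.0, 0.0], [3.0, 0.0]], strength=0.1)
print(identical, different, combined)
```

Passing only some rows of a layer to `similarity_penalty` would correspond to applying the idea to a subset of neurons, as Kurian describes. How the actual method balances similarity against accuracy is detailed in the paper, not here.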

“This approach creates a ‘wall of similarity’ that makes it difficult for attacks to proceed,” Aysu said. “Fundamentally, this attack has no path forward. However, the model is still functioning properly in terms of its ability to perform its assigned tasks.”

In proof-of-concept testing, the researchers found that the accuracy of the AI model incorporating the defense mechanism changed by less than 1%.

“In some cases, models that were retrained to include defense mechanisms were slightly more accurate, and in other cases they were slightly less accurate, but the overall changes were minimal,” Kurian said.

“We also tested how well the defense mechanism worked,” Kurian says. “We focused on models whose parameters could be extracted in less than four hours using cryptanalytic techniques. After retraining the models to incorporate the defense mechanism, we were unable to extract the parameters with cryptanalytic attacks that lasted for days.”

As part of this study, the researchers also developed a theoretical framework that can be used to quantify the probability of success of a cryptanalysis attack.

“This framework is useful because it allows us to estimate how robust a given AI model is against these attacks without having to run them for days,” Aysu says. “There is value in knowing how secure or insecure a system is.”

“We know this mechanism works, and we’re optimistic that people will use it to protect AI systems from these attacks,” Kurian said. “And we are open to working with industry partners interested in implementing this mechanism.”

“We also know that people who try to circumvent security measures will eventually find a way around them. Hacking and security always go back and forth,” Aysu says. “We look forward to continuing to find funding sources to help us keep pace with new security efforts.”

The paper, “Train to Defend: First Defense Against Cryptanalytic Neural Network Parameter Extraction Attacks,” will be presented at the 39th Annual Conference on Neural Information Processing Systems (NeurIPS), December 2-7 in San Diego, California.

More information: Ashley Kurian et al, Train to Defend: First Defense Against Cryptanalytic Neural Network Parameter Extraction Attacks, arXiv (2025). DOI: 10.48550/arxiv.2509.16546

Journal information: arXiv

Provided by North Carolina State University

Source: Researchers announce first defense against cryptanalytic attacks against AI (November 17, 2025). Retrieved November 17, 2025 from https://techxplore.com/news/2025-11-unveil-defense-cryptanalytic-ai.html
