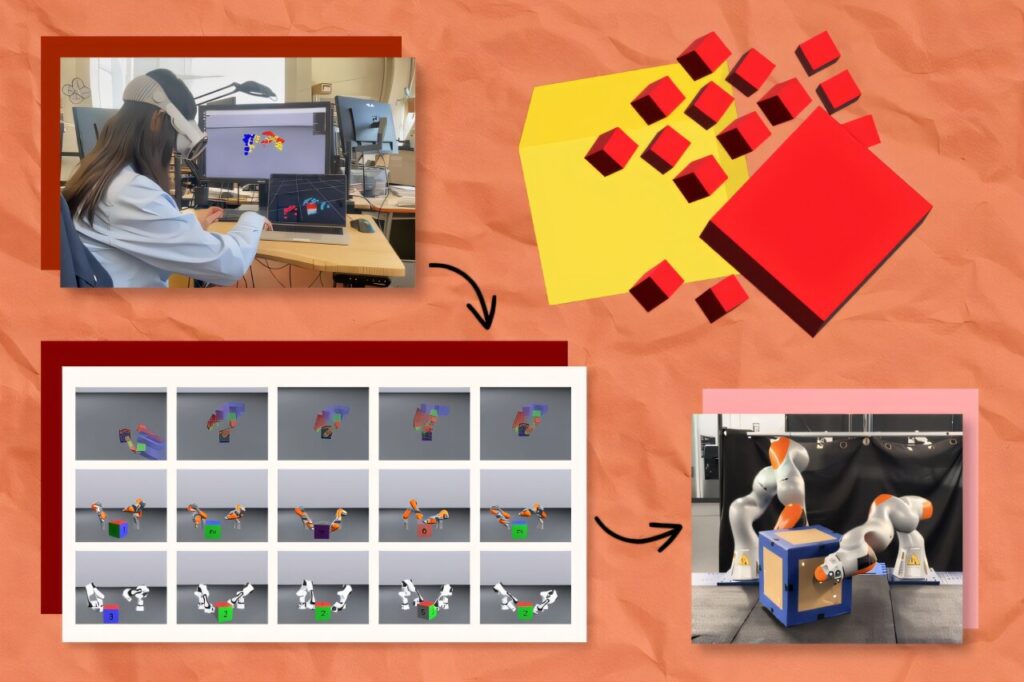

PhysicsGen can multiply dozens of virtual reality demonstrations into nearly 3,000 simulations per machine for mechanical companions such as robotic arms and hands. Credit: Alex Shipps/MIT CSAIL, using photos from the researchers

When ChatGPT or Gemini gives what seems to be an expert response to your burning questions, you may not realize how much information it relies on to give that reply. Like other popular generative artificial intelligence (AI) models, these chatbots rely on backbone systems called foundation models, which train on billions or even trillions of data points.

In a similar vein, engineers hope to build foundation models that train a range of robots on new skills, such as picking up, moving, and putting down objects in places like homes and factories. The problem is that it is difficult to collect and transfer instructional data across robotic systems. You could teach your system by teleoperating the hardware step by step with technology such as virtual reality (VR), but that can be time-consuming. Training on videos from the internet is less instructive, because the clips don't provide a step-by-step, specialized task walkthrough for particular robots.

A simulation-driven approach called “PhysicsGen,” from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Robotics and AI Institute, customizes robot training data to help robots find the most efficient movements for a task. The system can multiply a few dozen VR demonstrations into nearly 3,000 simulations per machine. These high-quality instructions are then mapped to the precise configurations of mechanical companions such as robotic arms and hands.
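The article does not spell out how a few dozen demonstrations fan out into thousands of simulations. One simple way to picture it is to perturb the object's starting pose in each demonstration and treat every perturbed copy as the seed for its own simulated rollout. The sketch below assumes exactly that scheme; the `Pose2D` type, the `multiply_demos` function, and the noise ranges are hypothetical, and the counts (24 demonstrations, 125 variants each) are chosen only so the arithmetic lands at 3,000.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical planar object pose: position in metres, heading in radians."""
    x: float
    y: float
    theta: float


def multiply_demos(demo_start_poses, variants_per_demo,
                   pos_noise=0.05, rot_noise=0.3, seed=0):
    """Expand each demonstrated start pose into randomly perturbed copies.

    Returns (demo_index, perturbed_pose) pairs. In this assumed scheme, each
    pair would seed one simulated rollout that is later refined for a robot.
    """
    rng = random.Random(seed)  # fixed seed so the expansion is reproducible
    seeds = []
    for i, pose in enumerate(demo_start_poses):
        for _ in range(variants_per_demo):
            seeds.append((i, Pose2D(
                pose.x + rng.uniform(-pos_noise, pos_noise),
                pose.y + rng.uniform(-pos_noise, pos_noise),
                pose.theta + rng.uniform(-rot_noise, rot_noise),
            )))
    return seeds


demos = [Pose2D(0.1 * k, 0.0, 0.0) for k in range(24)]
seeds = multiply_demos(demos, variants_per_demo=125)
print(len(seeds))  # 3000
```

In a real pipeline each seed would presumably still have to be turned into a feasible, robot-specific trajectory, and seeds the optimizer cannot solve would be discarded, so the raw product is an upper bound rather than a final count.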

PhysicsGen creates data that generalizes to specific robots and conditions through a three-step process. First, a VR headset tracks how humans manipulate objects such as blocks with their hands. These interactions are simultaneously mapped in a 3D physics simulator, which visualizes key points of the hand as small spheres that mirror the person’s gestures. For example, if you flipped a toy over, you would see 3D shapes representing different parts of your hand rotating a virtual version of that object.
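To make the first step concrete, here is a small sketch of how tracked hand keypoints could be carried into a simulator’s world frame and drawn as spheres. It assumes the headset reports each keypoint as an (x, y, z) position in metres in its own frame, and that a calibration rotation and translation relate that frame to the simulator. The `Sphere` type, the `keypoints_to_spheres` function, and the 1 cm radius are hypothetical, not part of PhysicsGen.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sphere:
    center: tuple  # (x, y, z) in the simulator's world frame, metres
    radius: float  # metres


def keypoints_to_spheres(keypoints, rotation, translation, radius=0.01):
    """Map headset-frame hand keypoints into the world frame as small spheres.

    Applies p_world = R @ p_headset + t to every keypoint, where R is an assumed
    3x3 calibration rotation (nested lists) and t an assumed 3-tuple offset.
    """
    spheres = []
    for p in keypoints:
        center = tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        spheres.append(Sphere(center, radius))
    return spheres


# Example: the headset frame is rotated 90 degrees about z and sits 0.5 m lower.
R_z90 = [[0.0, -1.0, 0.0],
         [1.0, 0.0, 0.0],
         [0.0, 0.0, 1.0]]
hand_keypoints = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
spheres = keypoints_to_spheres(hand_keypoints, R_z90, (0.0, 0.0, 0.5))
```

Streaming these sphere centers into the simulator every frame is what would make the virtual hand mirror the person’s gestures in real time.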

The pipeline then remaps these points onto a 3D model of a specific machine’s setup (such as a robotic arm), moving them to the precise “joints” where the system twists and turns. Finally, PhysicsGen uses trajectory optimization, essentially simulating the most efficient motions for completing a task, so the robot knows the best ways to do things like repositioning a box.
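The retargeting step can be pictured with a much simpler robot than the ones in the study. The sketch below assumes a two-link planar arm with link lengths `l1` and `l2` and retargets one 2D fingertip keypoint at a time using the textbook closed-form inverse kinematics solution. The function names and link lengths are hypothetical; the article does not describe how PhysicsGen retargets to multi-joint arms and hands.

```python
import math


def forward_kinematics(q1, q2, l1, l2):
    """Tip position (x, y) of a two-link planar arm with joint angles in radians."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def retarget_keypoint(x, y, l1, l2, positive_elbow=True):
    """Joint angles (q1, q2) that put the arm's tip on a 2D keypoint.

    Uses the closed-form two-link IK solution; returns None when the keypoint
    lies outside the arm's reachable annulus.
    """
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0:
        return None  # unreachable target
    sin_q2 = math.sqrt(1.0 - cos_q2 * cos_q2)
    if not positive_elbow:
        sin_q2 = -sin_q2  # pick the other elbow configuration
    q2 = math.atan2(sin_q2, cos_q2)
    q1 = math.atan2(y, x) - math.atan2(l2 * sin_q2, l1 + l2 * cos_q2)
    return q1, q2


# Retarget a short stream of (hypothetical) fingertip keypoints to joint angles.
keypoints = [(0.5, 0.2), (0.45, 0.3), (0.4, 0.4)]
joint_path = [retarget_keypoint(x, y, l1=0.4, l2=0.3) for x, y in keypoints]
```

Running each solution back through `forward_kinematics` is a cheap way to confirm the joint angles really place the tip on the keypoint; keypoints outside the arm’s reach return `None` and would need separate handling.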

Each simulation is a detailed training data point that walks a robot through potential ways to handle objects. When these are implemented in a policy (the action plan a robot follows), the machine has many different ways to approach a task and can try out different motions if one doesn’t work.

“We’re creating robot-specific data without needing humans to re-record specialized demonstrations for each machine,” says Lujie Yang, an MIT Ph.D. student in electrical engineering and computer science and CSAIL affiliate who is the lead author of a new paper introducing the project, posted to the arXiv preprint server. “We’re scaling up the data in an autonomous and efficient way, making task instructions useful to a wider range of machines.”

Generating so many instructional trajectories for robots could eventually help engineers build massive datasets to guide machines such as robotic arms and dexterous hands. For example, the pipeline might help two robotic arms collaborate on picking up warehouse items and placing them in the right boxes for delivery. The system may also guide two robots to work together in a home on tasks such as putting away cups.

PhysicsGen’s potential also extends to converting data designed for older robots or different environments into useful instructions for new machines. “Despite being collected for a specific type of robot, we can revive these prior datasets to make them more generally useful,” adds Yang.

Addition by multiplication

PhysicsGen turned just 24 human demonstrations into thousands of simulated ones, helping both digital and real-world robots reorient objects.

Yang and her colleagues first tested the pipeline in a virtual experiment in which a floating robotic hand needed to rotate a block into a target position. Trained on PhysicsGen’s large dataset, the digital robot performed the task with 81% accuracy, a 60% improvement over a baseline that learned only from human demonstrations.

The researchers also found that PhysicsGen could improve how virtual robotic arms collaborate to manipulate objects. Their system created extra training data that helped two pairs of robots successfully accomplish tasks as much as 30% more often than a purely human-taught baseline.

In an experiment with a pair of real-world robotic arms, the researchers observed similar improvements as the machines teamed up to flip a large box into its designated position. When the robots deviated from the intended trajectory or mishandled the object, they were able to recover mid-task by referencing alternative trajectories from their library of instructional data.
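One simple way to picture mid-task recovery is a nearest-neighbor lookup: when the robot drifts off its plan, find the stored waypoint closest to its current state, on any trajectory in the library, and continue from there. This is a hypothetical illustration rather than the researchers’ mechanism (in the study, the generated trajectories are used to train a policy); the dictionary-based library, the state tuples, and the function names are assumptions.

```python
import math


def nearest_waypoint(library, state):
    """Return (trajectory_name, index) of the stored waypoint closest to state.

    library: dict mapping a trajectory name to a list of states (tuples of floats).
    Distance is plain Euclidean distance over the state tuple.
    """
    best, best_dist = None, math.inf
    for name, trajectory in library.items():
        for k, waypoint in enumerate(trajectory):
            d = math.dist(waypoint, state)
            if d < best_dist:
                best, best_dist = (name, k), d
    return best


def recovery_plan(library, state):
    """Jump onto the closest stored trajectory and return the waypoints left to follow."""
    name, k = nearest_waypoint(library, state)
    return name, library[name][k:]


# Two hypothetical box-flipping trajectories over a 2D state.
library = {
    "flip_a": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)],
    "flip_b": [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)],
}
name, remaining = recovery_plan(library, (1.1, 1.9))  # robot has drifted off flip_a
```

A raw Euclidean distance is the simplest choice; a real system would presumably weight joint angles, velocities, and object pose differently before comparing states.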

Senior author Russ Tedrake, the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering at MIT, adds that this imitation-guided data generation technique combines the strengths of human demonstration with the power of robot motion planning algorithms.
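The idea of letting a human demonstration guide an optimizer can be shown with a deliberately tiny trajectory optimization problem. The sketch below plans a single joint angle over T steps with a fixed start and goal, minimizing the smoothness cost sum_t (q[t+1] - q[t])**2 plus weight * sum_t (q[t] - demo[t])**2, a penalty for straying from a retargeted demonstration. Setting the gradient to zero gives a tridiagonal linear system, solved here with the Thomas algorithm. This contact-free, quadratic toy is an assumption chosen for illustration; PhysicsGen’s optimizer handles contact-rich physics and is not reproduced here, and `guided_trajectory` and its parameters are hypothetical.

```python
def guided_trajectory(q_start, q_goal, demo, weight):
    """Joint trajectory minimizing smoothness + weight * demo-tracking cost.

    demo: T+1 retargeted demonstration samples; demo[0] and demo[-1] are ignored
    because the endpoints are pinned to q_start and q_goal.
    Stationarity for each interior t: (2 + w) q[t] - q[t-1] - q[t+1] = w * demo[t].
    """
    T = len(demo) - 1
    if T < 1:
        raise ValueError("demo needs at least a start and a goal sample")
    n = T - 1  # number of free interior samples
    if n == 0:
        return [q_start, q_goal]
    diag = 2.0 + weight
    rhs = [weight * demo[t] for t in range(1, T)]
    rhs[0] += q_start  # fixed neighbour of the first interior sample
    rhs[-1] += q_goal  # fixed neighbour of the last interior sample
    # Thomas algorithm; sub- and super-diagonal entries are all -1.
    c = [0.0] * n
    d = [0.0] * n
    c[0] = -1.0 / diag
    d[0] = rhs[0] / diag
    for i in range(1, n):
        denom = diag + c[i - 1]
        c[i] = -1.0 / denom
        d[i] = (rhs[i] + d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return [q_start] + x + [q_goal]


demo = [0.0, 0.9, 0.9, 0.9, 1.0]  # hypothetical retargeted joint angles
straight = guided_trajectory(0.0, 1.0, demo, weight=0.0)
guided = guided_trajectory(0.0, 1.0, demo, weight=1e6)
```

With `weight=0.0` the demonstration is ignored and the result is a straight line between the endpoints; as `weight` grows, the interior samples are pulled toward the demonstration. That trade-off loosely mirrors the point that a demonstration gives the planner a strong hint while the optimizer keeps the motion smooth and anchored at the required start and goal.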

“Even a single demonstration from a human can make the motion planning problem much easier,” says Tedrake, who is also a senior vice president of large behavior models at the Toyota Research Institute and a CSAIL principal investigator. “In the future, perhaps foundation models will be able to provide this information, and this type of data generation technique will provide a kind of post-training recipe for that model.”

The future of PhysicsGen

Soon, PhysicsGen may be extended to a new frontier: diversifying the tasks a machine can perform.

“We’d like to use PhysicsGen to teach a robot to pour water when it’s only been trained to put away dishes, for example,” says Yang. “Our pipeline doesn’t just generate dynamically feasible motions for familiar tasks; it also has the potential to create a diverse library of physical interactions that we believe can serve as building blocks for accomplishing entirely new tasks a human hasn’t demonstrated.”

Creating lots of widely applicable training data may eventually help build a foundation model for robots, though MIT researchers caution that this is a somewhat distant goal. The CSAIL-led team is investigating how PhysicsGen can harness vast, unstructured resources, such as internet videos, as seeds for simulation. The goal: transform everyday visual content into rich, robot-ready data that could teach machines to perform tasks no one explicitly showed them.

Yang and her colleagues also aim to make PhysicsGen even more useful in the future for robots with diverse shapes and configurations. To make that happen, they plan to leverage datasets with demonstrations of real robots, capturing how robotic joints move instead of human ones.

The researchers also plan to incorporate reinforcement learning, in which an AI system learns by trial and error, so that PhysicsGen can expand its dataset beyond human-provided examples. They may also augment the pipeline with advanced perception techniques to help a robot visually perceive and interpret its environment, allowing the machine to analyze and adapt to the complexities of the physical world.

For now, PhysicsGen shows how AI can help teach different robots to manipulate objects within the same category, particularly rigid ones. The pipeline may soon help robots find the best ways to handle soft items (such as fruit) and deformable ones (such as clay), but those interactions aren’t easy to simulate yet.

More information: Lujie Yang et al, Physics-Driven Data Generation for Contact-Rich Manipulation via Trajectory Optimization, arXiv (2025). DOI: 10.48550/arxiv.2502.20382

Journal information: arXiv

Provided by Massachusetts Institute of Technology

This story has been republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and education.

Citation: New simulation system generates thousands of training examples for robot hands and arms (2025, July 14) retrieved July 14, 2025 from https://techxplore.com/news/2025-07-Simulation

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.