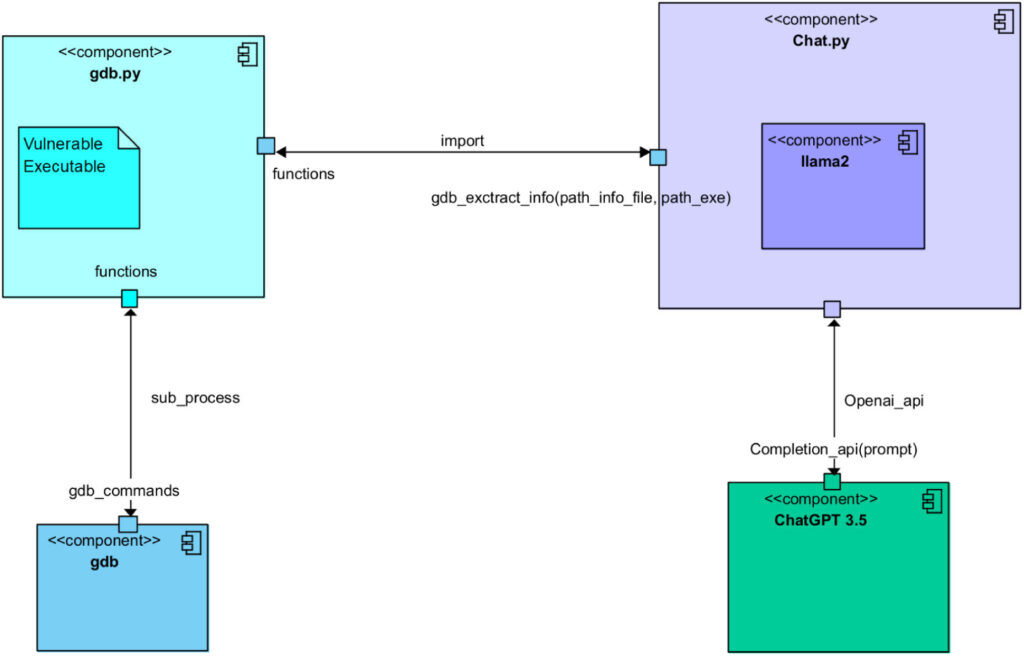

A high-level application architecture consisting of multiple interconnected modules that work together to automate vulnerability analysis and exploit generation. Credit: Caturano et al. (2025). Elsevier.

As computers and software become increasingly sophisticated, hackers need to adapt quickly to the latest developments and devise new strategies to plan and execute cyberattacks. One common strategy for maliciously infiltrating computer systems is known as software exploitation.

As its name suggests, this strategy involves exploiting bugs, vulnerabilities, or flaws in software to carry out unauthorized actions. These actions include gaining access to a user's personal accounts or computer, remotely executing malware or specific commands, stealing or modifying a user's data, or crashing a program or system.

Understanding how hackers devise potential exploits and plan their attacks is ultimately useful for developing effective security measures against them. Until now, creating exploits has been possible primarily for individuals with extensive knowledge of programming, of the protocols governing the exchange of data between devices or systems, and of operating systems.

However, a recent paper published in Computer Networks suggests that this may no longer be the case. Exploits can also be generated automatically by leveraging large language models (LLMs), such as the models underlying the well-known conversational platform ChatGPT. In fact, the authors of the paper were able to automate the generation of exploits via a carefully prompted conversation between ChatGPT and Llama 2, the open-source LLM developed by Meta.

“We work in the field of cybersecurity with an offensive approach,” Simon Pietro Romano, co-author of the paper, told Tech Xplore. “We were interested in understanding how far we could go by leveraging LLMs to support penetration testing activities.”

As part of their recent research, Romano and his colleagues initiated a conversation between ChatGPT and Llama 2 aimed at generating software exploits. By carefully designing the prompts fed to the two models, they ensured that the models took on different roles and completed five different steps known to support the creation of exploits.

Iterative AI-driven conversations between two LLMs lead to the generation of valid exploits for vulnerable code during an attack. Credit: Caturano et al. (2025). Elsevier.

These steps include analyzing vulnerable programs, identifying possible exploits, planning attacks based on these exploits, understanding the behavior of targeted hardware systems, and ultimately generating actual exploit code.

“Two different LLMs interacted with each other to go through all the steps involved in the process of creating a valid exploit for a vulnerable program,” explained Romano. “One of the two LLMs gathers ‘context’ information about the vulnerable program and its run-time configuration. It then asks the other LLM to create a working exploit. In short, the former LLM is good at asking questions.”

So far, the researchers have tested their LLM-based exploit generation method only in an initial experiment. Nevertheless, they found that it ultimately produced fully functional code for a buffer overflow exploit, an attack that overwrites data stored by a system to change the behavior of a particular program.

“This is a preliminary study, but it clearly demonstrates the feasibility of the approach,” Romano said. “The implications concern the possibility of reaching fully automated penetration testing and vulnerability assessment (VAPT).”

The recent research by Romano and his colleagues raises important questions about the risks of LLMs, as it shows how hackers could use them to automate the generation of exploits. In their next studies, the researchers plan to continue investigating the effectiveness of the exploit generation strategy they devised, to inform both the future development of LLMs and the advancement of cybersecurity measures.

“We are currently exploring further research paths in the same application field,” Romano added. “The natural continuation of our research seems to lie in the field of so-called ‘agentic’ approaches, with minimal human supervision.”

Written by Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Andrew Zinin.

More information: Chit chat between Llama 2 and ChatGPT for automatic exploit creation. Computer Networks (2025). DOI: 10.1016/j.comnet.2025.111501.

©2025 Science X Network

Citation: Conversations between LLMs can automate the creation of exploits, retrieved on July 19, 2025 from https://techxplore.com/news/2025-07.

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.