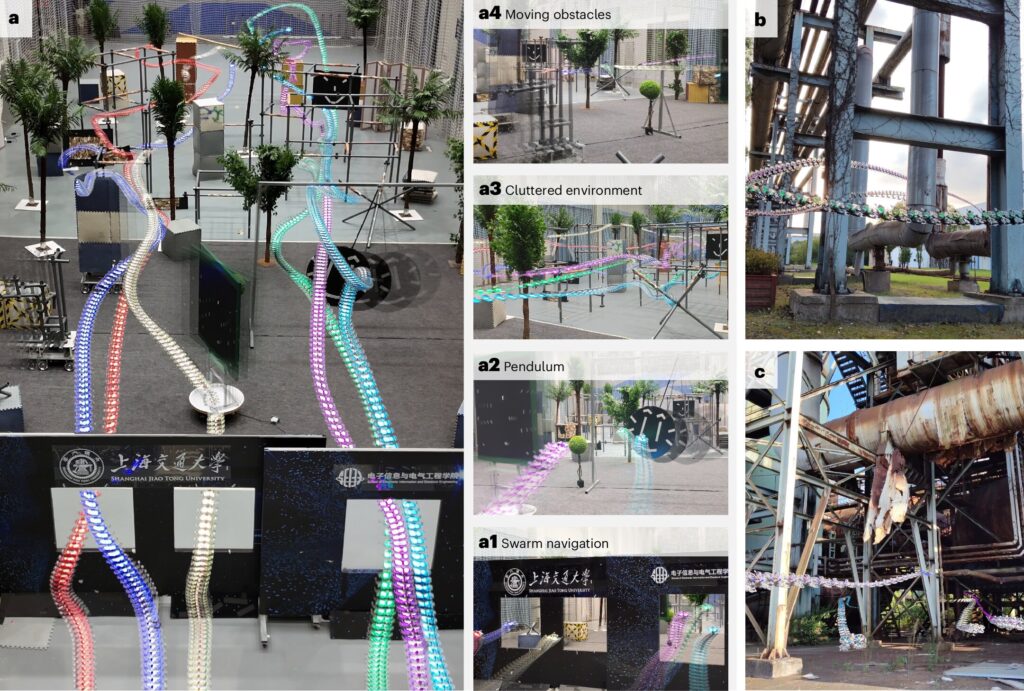

Vision-based agile swarm navigation through cluttered environments using end-to-end neural network controllers trained with differentiable physics. Credit: Nature Machine Intelligence (2025). doi:10.1038/s42256-025-01048-0

Unmanned aerial vehicles (UAVs), commonly known as drones, are widely used around the world for real-world tasks such as filming videos, monitoring crops and other environments from above, assessing disaster zones, and conducting military operations. Despite their extensive use, most existing drones need to be fully or partially operated by human agents.

Furthermore, many drones are unable to navigate cluttered, crowded, or unknown environments without colliding with nearby objects. Those that can navigate these environments typically rely on expensive or bulky components, such as advanced sensors, graphics processing units (GPUs), and wireless communication systems.

Researchers at Shanghai Jiao Tong University have recently introduced a new insect-inspired approach that allows teams of drones to move at high speeds and autonomously navigate complex environments. The proposed approach, introduced in a paper published in Nature Machine Intelligence, relies on both deep learning algorithms and core physics principles.

“Our research was inspired by the incredible flight capabilities of small insects like flies,” Professor Danping Zou and Professor Weiyao Lin, co-authors of the paper, told Tech Xplore. “We have always been amazed that, with only a small brain and limited sensing, these tiny creatures can perform agile and intelligent maneuvers: avoiding obstacles, hovering in mid-air and chasing prey.

“Replicating that level of flight control has long been a major challenge in robotics. It requires tightly integrated perception, planning and control, all done with very limited onboard computation, much like an insect's brain.”

The most common computational approach to controlling the flight of multiple drones decomposes autonomous navigation into separate modules, such as state estimation, mapping, path planning, trajectory generation, and control. Although addressing these subtasks individually is effective, errors can accumulate across the modules and each stage adds latency to the drone's response. In other words, the drone may react more slowly as it approaches an obstacle, which increases the risk of collisions in dynamic and cluttered environments.

“The main purpose of our study was to investigate whether lightweight artificial neural networks (ANNs) could replace this classic pipeline with a compact, end-to-end policy,” Professors Zou and Lin said.

“This network takes sensor data as input and outputs control actions directly. This is a paradigm that reflects the way flies use a small number of neurons to generate complex and intelligent behavior. We sought to demonstrate not only biological elegance, but also that minimal sensing and computation can still yield high-performance autonomous flight.”

The new system relies primarily on a newly developed lightweight artificial neural network that generates control commands for quadrotors from an ultra-low-resolution depth map of just 12×16 pixels. Although the resolution of the maps fed to the algorithm is low, the researchers found that it is sufficient for the network to understand the surrounding environment and plan the vehicle's motion effectively.
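To give a feel for how little information a 12×16 depth map carries, here is a minimal sketch that reduces a larger depth image to that size. The article does not say how the researchers obtain their low-resolution maps; the min-pooling used here (each output pixel keeps the nearest obstacle in its block), the function name `downsample_depth` and the 48×64 synthetic input are all assumptions made for illustration.

```python
def downsample_depth(depth, out_rows=12, out_cols=16):
    """Min-pool a 2D depth image (list of rows, in metres) to out_rows x out_cols.

    Hypothetical helper: keeping the minimum of each block is a conservative
    choice, because the nearest obstacle in the block survives the reduction.
    """
    rows, cols = len(depth), len(depth[0])
    if rows % out_rows or cols % out_cols:
        raise ValueError("input size must be a multiple of the output size")
    block_r, block_c = rows // out_rows, cols // out_cols
    return [
        [
            min(
                depth[r][c]
                for r in range(i * block_r, (i + 1) * block_r)
                for c in range(j * block_c, (j + 1) * block_c)
            )
            for j in range(out_cols)
        ]
        for i in range(out_rows)
    ]


# Synthetic 48x64 image: open space at 10 m, with one obstacle pixel at 1.5 m.
depth = [[10.0] * 64 for _ in range(48)]
depth[5][9] = 1.5

small = downsample_depth(depth)
print(len(small), "x", len(small[0]))   # 12 x 16
print(small[1][2])                      # 1.5 (the obstacle survives the pooling)
```

The whole input to the policy is then only 192 numbers, which is part of why such a network can stay small.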

“This network was trained in a custom-built simulator made up of simple geometric shapes (cubes, ellipsoids, cylinders, and planes) that allowed us to generate diverse yet structured environments,” explained Professors Zou and Lin. “Our training process is extremely efficient, thanks to a differentiable physics-based pipeline. It supports both single-agent and multi-agent training modes. In a multi-agent configuration, other drones are treated as dynamic obstacles during learning.”

An important advantage of the multi-drone navigation approach developed by the researchers is that it relies on a very compact, lightweight deep neural network with only three convolutional layers. The researchers tested it on an embedded computing board that costs only $21 and found that it ran both smoothly and energy-efficiently.
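As a rough back-of-the-envelope check on how small such a network can be, the sketch below counts the parameters of a three-layer convolutional network applied to a 12×16 input. The channel widths, kernel sizes, unpadded convolutions and three-value output head are hypothetical choices, not the architecture from the paper; the researchers only state that their model has fewer than 2 MB of parameters.

```python
def conv_params(in_ch, out_ch, kernel):
    """Weights plus biases of one 2D convolution layer."""
    return in_ch * out_ch * kernel * kernel + out_ch


def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one axis of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


# Hypothetical layers as (in_channels, out_channels, kernel); not from the paper.
LAYERS = [(1, 32, 3), (32, 64, 3), (64, 128, 3)]
NUM_OUTPUTS = 3  # hypothetical size of the control command

height, width = 12, 16  # depth-map resolution quoted in the article
channels = 1
total_params = 0
for in_ch, out_ch, kernel in LAYERS:
    total_params += conv_params(in_ch, out_ch, kernel)
    height, width = conv_out(height, kernel), conv_out(width, kernel)
    channels = out_ch

# Flatten the final feature map and add a single linear output head.
features = channels * height * width
total_params += features * NUM_OUTPUTS + NUM_OUTPUTS

size_mb = total_params * 4 / 1e6  # 4 bytes per float32 parameter

print(f"final feature map: {channels} x {height} x {width}")
print(f"parameters: {total_params}, about {size_mb:.2f} MB as float32")
```

Even with these fairly generous channel widths, the total stays well under the 2 MB figure quoted by the researchers, which is consistent with the network fitting on a low-cost embedded board.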

“Training converges in just two hours on an RTX 4090 GPU, which is very fast for policy learning,” said Professors Zou and Lin. “Our system naturally supports multi-robot navigation without centralized planning or explicit communication, enabling scalable deployment in swarm scenarios.”

The team’s neural network runs on a low-end development board that costs just $21. Credit: Zhang et al.

When they reviewed previous literature in this field, the researchers found that many deep learning algorithms for drone navigation do not generalize well across real-world scenarios. This is because they often fail to account for unexpected obstacles or environmental changes, and they require training on large amounts of flight data labeled by human experts.

“Our most important finding is that embedding the physics model of the quadrotor directly into the training process can dramatically improve both training efficiency and real-world performance in terms of robustness and agility,” Professors Zou and Lin said.

“This technique, known as differentiable physics learning, was not invented by us, but we were the first to extend it and successfully apply it to real-world quadrotor control. Through this study, we have also reached three powerful, unexpected insights.”
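The idea of training through a physics model can be illustrated with a toy example. The sketch below is not the researchers' pipeline: it replaces the quadrotor with a one-dimensional point mass, the neural network with a two-parameter stand-in policy (gains `kp` and `kd`), and reverse-mode backpropagation with a tiny hand-written forward-mode automatic differentiation class (`Dual`). All names and numbers are assumptions. What it does show is the core mechanism: because every simulation step is differentiable, the gradient of the task loss with respect to the policy parameters can be computed exactly through the whole rollout and used for gradient descent.

```python
class Dual:
    """A value plus its derivative with respect to one chosen parameter."""

    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.val - other.val, self.der - other.der)

    def __rsub__(self, other):
        return Dual.lift(other) - self

    def __mul__(self, other):
        other = Dual.lift(other)
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __rmul__ = __mul__


def rollout_loss(kp, kd, goal=1.0, dt=0.05, steps=60):
    """Simulate a 1D point mass and return the accumulated squared error."""
    x, v, loss = 0.0, 0.0, 0.0
    for _ in range(steps):
        accel = kp * (goal - x) - kd * v   # stand-in policy, not a neural network
        v = v + accel * dt                 # physics step (semi-implicit Euler)
        x = x + v * dt
        loss = loss + (x - goal) * (x - goal) * dt
    return loss


def loss_and_grad(kp, kd):
    """Differentiate the full rollout with respect to each policy parameter."""
    wrt_kp = rollout_loss(Dual(kp, 1.0), Dual(kd, 0.0))
    wrt_kd = rollout_loss(Dual(kp, 0.0), Dual(kd, 1.0))
    return wrt_kp.val, (wrt_kp.der, wrt_kd.der)


kp, kd = 1.0, 1.0
initial_loss, _ = loss_and_grad(kp, kd)
for _ in range(200):                       # plain gradient descent
    _, (g_kp, g_kd) = loss_and_grad(kp, kd)
    kp -= 0.2 * g_kp
    kd -= 0.2 * g_kd
final_loss, _ = loss_and_grad(kp, kd)

print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
print(f"learned gains: kp={kp:.2f}, kd={kd:.2f}")
```

No labeled flight data appears anywhere in this loop: the only supervision is the task loss evaluated on simulated trajectories, which is the property the researchers highlight.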

The promising findings achieved by Professors Zou and Lin and their colleagues illustrate the potential of small artificial neural network-based models for tackling complex navigation tasks. The researchers have shown that these models may be more effective than is often assumed and can help us understand how larger models work.

“Just as early neuroscience advanced through the study of fruit flies, whose simple neural circuits helped unlock fundamental insights, small models give us a clear view of how perception, decision-making and control come together,” Professors Zou and Lin said. “In our case, a model with fewer than 2 MB of parameters enabled multi-agent coordination without communication. Simplicity can lead to emergent intelligence.”

Notably, the lightweight models developed by the researchers worked well despite being trained entirely in a simulated environment. This is in stark contrast to many previously developed models, which require a significant amount of expert-labeled data.

“We learned that intelligence doesn’t have to rely on large datasets,” the researchers said. “We trained our policies entirely in simulation, without using internet-scale data, pre-collected logs, or hand-crafted demonstrations. We used only a few basic tasks and simple geometric environments, driven by a differentiable physics engine.”

Overall, the results of this recent study suggest that neural networks guided by fundamental physics principles can achieve better results than networks trained with millions of images, maps, or other labeled data. Furthermore, the researchers found that even low-resolution depth images can accurately guide a robot’s behavior.

“Like fruit flies, which manage incredible aerial feats even though their vision is limited to low-resolution compound eyes, we controlled drones flying at speeds of up to 20 m/s using 12×16 pixel depth images,” Professors Zou and Lin said. “This supports a bold hypothesis: navigation performance may depend more on the agent’s internal understanding of the physical world than on sensor fidelity alone.”

In the future, the approach developed by Professor Zou, Professor Lin and their colleagues could be deployed on more types of aerial vehicles and tested in specific real-world scenarios. Ultimately, it could help expand the tasks that ultra-light drones can tackle, for example allowing them to automatically take selfies or take part in racing competitions. The approach could also prove useful for broadcasting sports and other events, searching collapsed buildings during search and rescue operations, and inspecting cluttered warehouses.

“We are currently investigating the use of optical flow instead of depth maps for fully autonomous flight,” added Professors Zou and Lin. “Optical flow provides basic motion cues and has long been studied in neuroscience as an important component of insect vision.

“We hope that by using it we will come closer to mimicking the natural strategies that insects use for navigation. Another important direction we are pursuing is the interpretability of end-to-end learning systems.”

The team’s lightweight neural networks have been found to work very well in real-world experiments, but how they achieve these promising results is not yet fully understood. As part of their next studies, Professor Zou and Professor Lin want to shed more light on the internal representations of the network.

Written for you by our author Ingrid Fadelli, edited by Gaby Clark, and fact-checked and reviewed by Andrew Zinin. This article is the result of careful human work.

More information: Zhang et al, Learning vision-based agile flight via differentiable physics, Nature Machine Intelligence (2025). doi: 10.1038/s42256-025-01048-0.

©2025 Science X Network

Citation: A new approach allows drones to autonomously navigate complex environments at high speed, retrieved 21 July 2025 from https://techxplore.com/2025-07.

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.