Credit: arXiv (2025). DOI: 10.48550/arxiv.2503.02854

Let’s say you’re reading a story or playing a game of chess. You may not have noticed, but at each step of the way, your mind kept track of how the situation (or “state of the world”) was changing. You can imagine this as a kind of running list of events, which you use to update your predictions of what will happen next.

Language models like ChatGPT also track changes inside their own “mind” when finishing a block of code or anticipating what you’ll write next. They typically make educated guesses using transformers (internal architectures that help models understand sequential data), but the systems are sometimes wrong because of flawed thinking patterns.

Identifying and adjusting these underlying mechanisms helps language models become more reliable prognosticators, particularly on more dynamic tasks such as forecasting weather and financial markets.

But do these AI systems process developing situations like we do? A new paper posted to the arXiv preprint server by researchers in MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Department of Electrical Engineering and Computer Science shows that the models instead use clever mathematical shortcuts between each progressive step in a sequence, eventually making reasonable predictions.

The team made this observation by going under the hood of language models and evaluating how closely they could keep track of objects that change position rapidly. Their findings show that engineers can control when language models use particular workarounds as a way to improve the systems’ predictive capabilities.

Shell Game

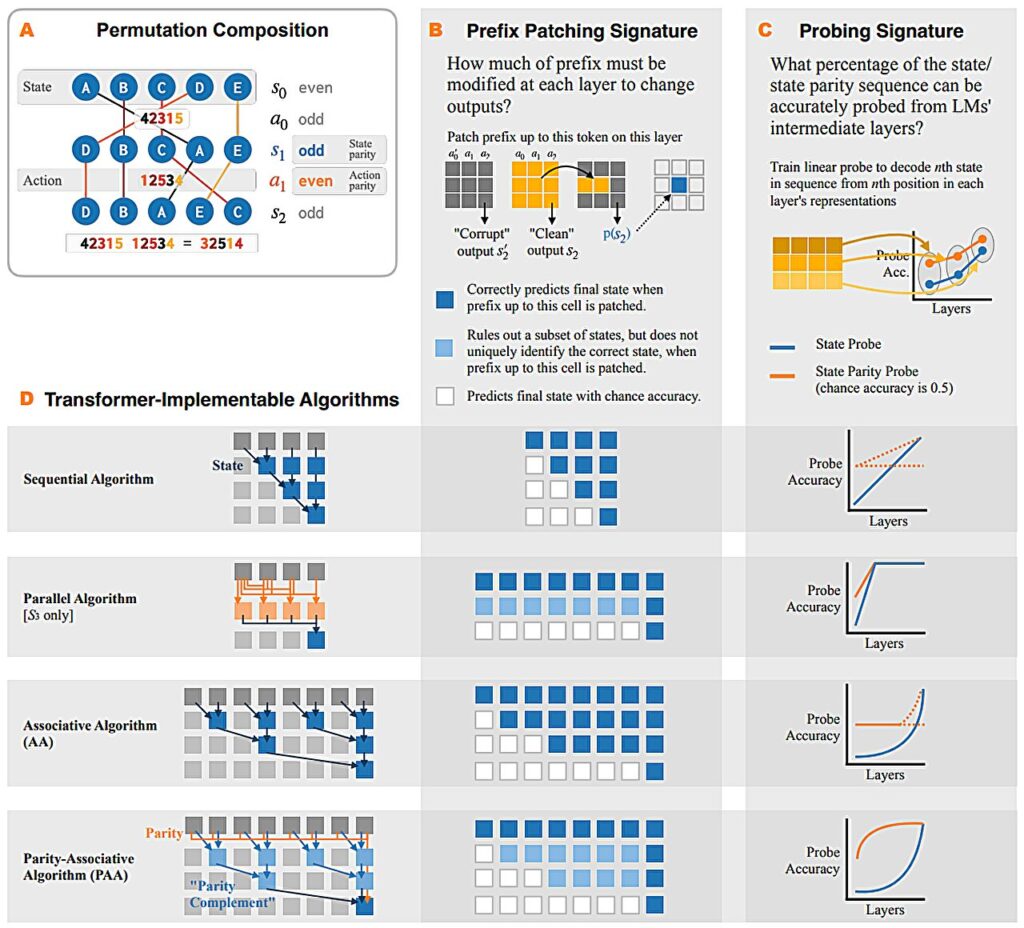

The researchers analyzed the inner workings of these models using a clever experiment reminiscent of a classic concentration game. Have you ever had to guess the final location of an object after it was placed under a cup and shuffled among identical containers? The team used a similar test, in which the model guessed the final arrangement of particular digits (also known as a permutation). The models were given a starting sequence, such as “42135”, and instructions on when and where to move each digit, such as moving the “4” to the third position and so on, without being told the final result.
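As a reference point, the task itself can be simulated one instruction at a time. The sketch below is a minimal illustration, assuming each instruction is a swap of two positions. The `apply_swaps` helper and the particular list of swaps are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def apply_swaps(start: str, swaps: List[Tuple[int, int]]) -> List[str]:
    """Apply each (i, j) position swap in order.

    Returns every state that was visited, beginning with `start`.
    """
    state = list(start)
    history = ["".join(state)]
    for i, j in swaps:
        state[i], state[j] = state[j], state[i]
        history.append("".join(state))
    return history


# Hypothetical instructions: the first swap moves the "4" to the third position.
steps = [(0, 2), (1, 4), (0, 3)]
states = apply_swaps("42135", steps)
print(states)  # ['42135', '12435', '15432', '35412']
```

This is the step-by-step procedure a person would follow, updating one state after another.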

In these experiments, transformer-based models gradually learned to predict the correct final arrangements. However, instead of shuffling the digits according to the instructions they were given, the systems aggregated information between successive states (or individual steps in the sequence) and calculated the final permutation.

One go-to pattern the team observed, called the “Associative Algorithm,” essentially organizes nearby steps into groups and then calculates a final guess. This process can be thought of as being structured like a tree, where the initial numerical arrangement is the “root.” As you move up the tree, adjacent steps are grouped into different branches and multiplied together. At the top of the tree is the final combination of numbers, calculated by multiplying together each resulting sequence on the branches.
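The grouping idea can be sketched in a few lines. The example below is an illustration under stated assumptions, not the mechanism recovered from the models: each step is written as a permutation of positions, adjacent steps are composed ("multiplied") in pairs, and the pairing is repeated until one permutation remains. The names `swap_perm`, `compose`, `apply_perm`, and `tree_reduce` are hypothetical.

```python
from typing import Sequence, Tuple

Perm = Tuple[int, ...]


def swap_perm(n: int, i: int, j: int) -> Perm:
    """Permutation of n positions that exchanges positions i and j."""
    p = list(range(n))
    p[i], p[j] = p[j], p[i]
    return tuple(p)


def compose(first: Perm, second: Perm) -> Perm:
    """Single permutation equivalent to applying `first`, then `second`."""
    return tuple(first[k] for k in second)


def apply_perm(perm: Perm, state: str) -> str:
    """Position i of the result takes the character at position perm[i]."""
    return "".join(state[k] for k in perm)


def tree_reduce(steps: Sequence[Perm]) -> Tuple[Perm, int]:
    """Compose adjacent steps pairwise until one permutation remains.

    Returns the combined permutation and the number of tree levels used.
    """
    if not steps:
        raise ValueError("need at least one step")
    level = list(steps)
    depth = 0
    while len(level) > 1:
        paired = [compose(level[k], level[k + 1])
                  for k in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            paired.append(level[-1])  # an unpaired last step moves up unchanged
        level = paired
        depth += 1
    return level[0], depth


# Hypothetical instructions, written as swaps of positions.
steps = [swap_perm(5, 0, 2), swap_perm(5, 1, 4), swap_perm(5, 0, 3)]
total, depth = tree_reduce(steps)
print(apply_perm(total, "42135"), depth)  # 35412 2
```

Because composing permutations is associative, the grouping does not change the answer, and a tree over n steps is only about log2(n) levels deep, whereas a step-by-step simulation needs n sequential updates.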

The other way language models guessed the final permutation was through a crafty mechanism called the “Parity-Associative Algorithm.” It determines whether the final arrangement is the result of an even or odd number of rearrangements of individual digits. The mechanism then groups adjacent sequences from different steps before multiplying them, just like the Associative Algorithm.
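The parity check described above is cheap to compute, which may be part of its appeal as a shortcut. The sketch below is a minimal illustration that assumes every instruction is a swap of two positions; the function names are hypothetical. Each swap flips the parity, so counting swaps gives the parity of the final arrangement, and the same value can be recovered from the final permutation's cycle structure.

```python
from typing import List, Sequence, Tuple


def parity_of_swaps(swaps: List[Tuple[int, int]]) -> int:
    """0 if the swaps amount to an even rearrangement, 1 if odd."""
    # A swap of a position with itself changes nothing, so it is not counted.
    return sum(1 for i, j in swaps if i != j) % 2


def permutation_parity(perm: Sequence[int]) -> int:
    """Parity of a permutation, computed from its cycles.

    A cycle of length L is equivalent to L - 1 swaps.
    """
    seen = [False] * len(perm)
    parity = 0
    for start in range(len(perm)):
        length = 0
        k = start
        while not seen[k]:
            seen[k] = True
            k = perm[k]
            length += 1
        if length > 0:
            parity ^= (length - 1) & 1
    return parity


# Three hypothetical swaps and the single permutation they combine into.
swaps = [(0, 2), (1, 4), (0, 3)]
final_perm = (3, 4, 0, 2, 1)
print(parity_of_swaps(swaps), permutation_parity(final_perm))  # 1 1
```

Knowing the parity rules out half of all possible arrangements, but it does not identify the final one, so the grouping-and-multiplying step is still needed.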

“These behaviors tell us that transformers perform simulation by associative scan. Instead of following state changes step by step, the models organize them into hierarchies,” says MIT PhD student and CSAIL affiliate Belinda Li SM ’23, lead author of the paper.

“How do we encourage transformers to learn better state tracking? Instead of imposing that these systems form inferences about data in a human-like way, perhaps we should cater to the approaches they naturally use when tracking state changes.”

“One avenue of research has been to expand test-time computing along the depth dimension rather than the token dimension, by increasing the number of transformer layers rather than the number of chain-of-thought tokens used during test-time reasoning,” adds Li. “Our work suggests that this approach would allow transformers to build deeper reasoning trees.”

Through the looking glass

Li and her co-authors observed how the Associative and Parity-Associative algorithms worked using tools that allowed them to peer inside the “mind” of language models.

They first used a method called “probing,” which shows what information flows through an AI system. Imagine being able to look into a model’s brain and see its thoughts at a particular moment. In a similar way, the technique maps out the system’s mid-experiment predictions about the final arrangement of digits.

Next, they used a tool called “activation patching” to show where the language model processes changes to a situation. It involves meddling with some of the system’s “ideas” by injecting incorrect information into certain parts of the network while keeping other parts constant, and then seeing how the system adjusts its predictions.
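The patching idea can be illustrated with a deliberately simple stand-in. The sketch below is not a transformer and not the authors' setup: it assumes a toy "network" in which layer k applies the k-th swap to a hidden state, and the `run` function and both instruction lists are hypothetical. A hidden state recorded from a run on altered instructions is injected into a run on the original instructions, one layer at a time, to see which layers change the output.

```python
from typing import List, Optional, Tuple

Swap = Tuple[int, int]


def run(start: str, swaps: List[Swap],
        patch_at: Optional[int] = None,
        patch_value: Optional[str] = None) -> Tuple[str, List[str]]:
    """Toy layered computation: layer k applies swaps[k] to the hidden state.

    If `patch_at` is set, the hidden state after that layer is overwritten
    with `patch_value`. Returns the final output and the per-layer states.
    """
    hidden = list(start)
    cache = []
    for layer, (i, j) in enumerate(swaps):
        hidden[i], hidden[j] = hidden[j], hidden[i]
        if layer == patch_at and patch_value is not None:
            hidden = list(patch_value)  # inject the foreign activation
        cache.append("".join(hidden))
    return "".join(hidden), cache


clean = [(0, 2), (1, 4), (0, 3)]
altered = [(0, 2), (2, 3), (0, 3)]  # differs from `clean` only at step 1

clean_out, _ = run("42135", clean)
_, altered_cache = run("42135", altered)

# Patch one layer at a time and record whether the output changes.
changed = [
    run("42135", clean, patch_at=k, patch_value=altered_cache[k])[0] != clean_out
    for k in range(len(clean))
]
print(changed)  # [False, True, True]
```

In this toy, patching layer 0 has no effect because both runs agree there, while patching at or after the altered step changes the answer, which is the kind of localization the technique is meant to reveal.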

These tools revealed when the algorithms would make errors and when the systems “figured out” how to correctly guess the final permutations. The researchers observed that the Associative Algorithm was learned faster than the Parity-Associative Algorithm and also performed better on longer sequences. Li attributes the latter’s difficulties with more elaborate instructions to an over-reliance on heuristics (or rules that allow a reasonable solution to be computed quickly) to predict permutations.

“We found that when language models use a heuristic early in training, they start to build these tricks into their mechanisms,” says Li. “However, those models tend to generalize worse than ones that don’t rely on heuristics. We found that certain pre-training objectives can deter or encourage these patterns, so in the future we may look to design techniques that discourage models from picking up bad habits.”

The researchers note that their experiments were done on small-scale language models fine-tuned on synthetic data, but found that model size had little effect on the results. This suggests that fine-tuning larger language models, such as GPT 4.1, would likely yield similar results. The team plans to examine their hypotheses more closely by testing language models of different sizes that have not been fine-tuned, evaluating their performance on dynamic real-world tasks such as tracking code and following how stories evolve.

Harvard University postdoc Keyon Vafa, who was not involved in the paper, says the researchers’ findings could create opportunities to advance language models. “Many uses of large language models rely on tracking state: anything from providing recipes to writing code to keeping track of details in a conversation,” he says.

“This paper makes significant progress in understanding how language models perform these tasks. This progress provides us with interesting insights into what language models are doing and offers promising new strategies for improving them.”

More information: Belinda Z. Li et al, (How) Do Language Models Track State?, arXiv (2025). DOI: 10.48550/arxiv.2503.02854

Journal information: arXiv

Provided by Massachusetts Institute of Technology

This story has been republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and education.

Citation: Probing AI ‘thoughts’ reveals models use tree-like math to track shifting information (2025, July 21) retrieved 22 July 2025 from https://techxplore.com/news/2025-07-probing-ai-thoughts-reveals-ree.html
