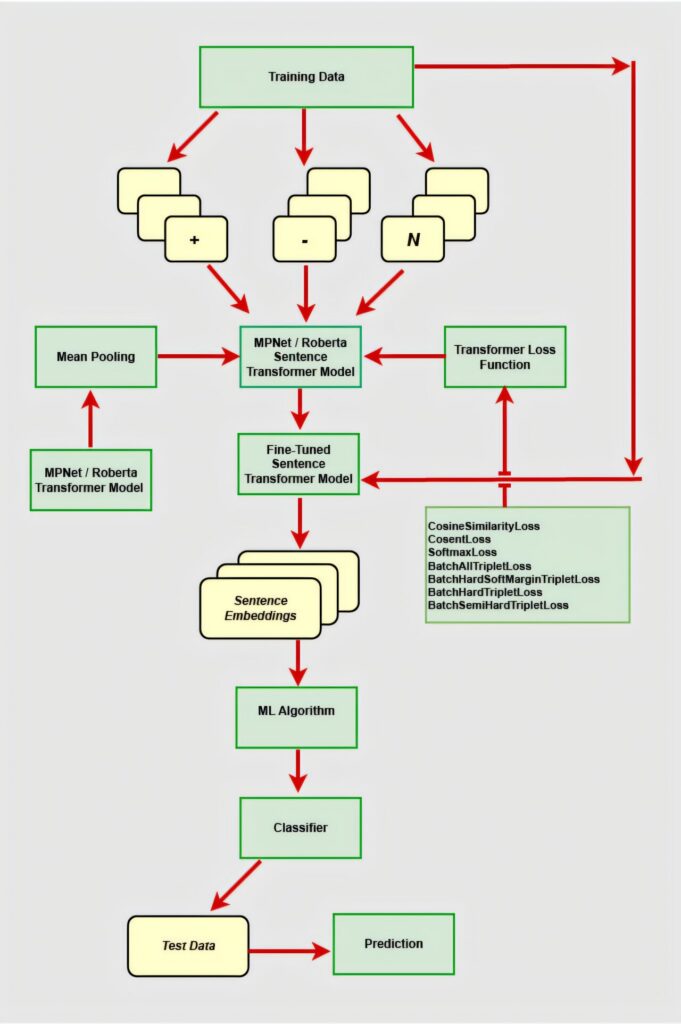

Schematic diagram of the hybrid sentiment analysis pipeline: the transformer encoder generates fixed-length embeddings that feed the machine learning classifier. Credit: Procedia Computer Science (2025). doi:10.1016/j.procs.2025.03.161

Artificial intelligence is advancing at a fierce pace, with ever-larger models dominating the scene: more parameters, more data, more power. But here's the real question: do we really need to go bigger? We challenged that assumption by asking a different question: how can an organization mine real-time customer sentiment without renting a GPU farm? Every tweet and review holds actionable insights, but running a large language model on each input incurs steep computational and financial costs.

Our recent study, published in Procedia Computer Science, asked whether leaner architectures can deliver comparable accuracy while cutting training and inference time. By pairing fine-tuned sentence transformers with lightweight classifiers, we found that we could not only match, and in some cases exceed, the performance of large language models, but also run comfortably on commodity hardware. This approach could reshape the economics of sentiment analysis.

Our approach

We built the pipeline on MPNet and RoBERTa-Large, two powerful and efficient transformer backbones. First, we convert each input sentence into a fixed-length vector by applying average pooling to its token embeddings. This turns the text into a semantically rich representation without the overhead of per-token processing downstream. Next, we directly fine-tune these sentence transformers on labeled sentiment data.
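The pooling step can be illustrated with a minimal sketch. It assumes the encoder has already produced one vector per token and that padding positions are flagged by an attention mask; the mask handling, the function name `mean_pool`, and the toy numbers are illustrative assumptions, not details taken from the study.

```python
from typing import List


def mean_pool(token_embeddings: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Average the embeddings of real tokens (mask == 1), ignoring padding."""
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("one mask entry is required per token")
    kept = [vec for vec, keep in zip(token_embeddings, attention_mask) if keep]
    if not kept:
        raise ValueError("at least one unmasked token is required")
    dim = len(kept[0])
    # Column-wise mean over the kept token vectors.
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Toy input (hypothetical): three 2-dimensional token vectors, the last is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
sentence_vector = mean_pool(tokens, mask)
print(sentence_vector)  # [2.0, 3.0]
```

Whatever the sentence length, the output has the same dimensionality as a single token embedding, which is what lets a tabular classifier consume it.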

We apply supervised loss functions to shape the embedding space: CosineSimilarity and CoSENT pull same-sentiment sentence pairs closer together, SoftmaxLoss aligns sentence pairs with their class labels, and the triplet loss variants (BatchAll, BatchHard, SoftMargin, SemiHard) widen the margin between positive and negative examples so that different sentiments are clearly separated.
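To make the two loss families concrete, here is a minimal per-example sketch of a cosine-similarity loss and a margin-based triplet loss. It is a simplified illustration, not the study's training code: the target scores (1.0 for same sentiment, 0.0 for different), the margin value, and the use of Euclidean distance are assumptions, and the batch-mining strategies that distinguish the triplet variants are not shown.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_similarity_loss(u: Sequence[float], v: Sequence[float], target: float) -> float:
    """Squared error between the pair's cosine similarity and a target score."""
    return (cosine_similarity(u, v) - target) ** 2


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 1.0) -> float:
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Hypothetical 2-dimensional embeddings.
pair_loss_aligned = cosine_similarity_loss([1.0, 0.0], [1.0, 0.0], target=1.0)
pair_loss_misaligned = cosine_similarity_loss([1.0, 0.0], [0.0, 1.0], target=1.0)
triplet_ok = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
triplet_bad = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0])
print(pair_loss_aligned, pair_loss_misaligned, triplet_ok, triplet_bad)  # 0.0 1.0 0.0 5.0
```

In both cases the loss is zero when the embeddings already respect the labels and grows as same-sentiment sentences drift apart or different-sentiment sentences crowd together.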

With fine-tuned embeddings in hand, we treat sentiment classification as a classic machine learning problem. We feed the vectors to mature classifiers: XGBoost (extreme gradient boosting), LightGBM (light gradient-boosting machine), and SVM (support vector machine).

By isolating heavy transformer fine-tuning from fast, tabular classification, the pipeline minimizes end-to-end training time and reduces run-time memory requirements. The modular design lets researchers keep the core embedding pipeline and swap in their preferred classifier without redesigning anything.
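The swap-in design can be sketched with a small interface. The `Classifier` protocol, the `train_and_predict` helper, and the toy `NearestCentroid` model below are hypothetical names introduced for illustration; the nearest-centroid model is only a dependency-free stand-in for XGBoost, LightGBM, or an SVM, and the embeddings are made-up 2-dimensional vectors.

```python
import math
from typing import Dict, List, Protocol, Sequence

Vector = Sequence[float]


class Classifier(Protocol):
    """Anything with fit/predict can sit behind the embedding step."""
    def fit(self, X: Sequence[Vector], y: Sequence[str]) -> "Classifier": ...
    def predict(self, X: Sequence[Vector]) -> List[str]: ...


class NearestCentroid:
    """Toy stand-in classifier: predicts the label whose mean vector is closest."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, X: Sequence[Vector], y: Sequence[str]) -> "NearestCentroid":
        grouped: Dict[str, List[Vector]] = {}
        for vec, label in zip(X, y):
            grouped.setdefault(label, []).append(vec)
        self.centroids = {
            label: [sum(col) / len(vecs) for col in zip(*vecs)]
            for label, vecs in grouped.items()
        }
        return self

    def predict(self, X: Sequence[Vector]) -> List[str]:
        return [
            min(self.centroids, key=lambda label: math.dist(vec, self.centroids[label]))
            for vec in X
        ]


def train_and_predict(clf: Classifier, train_X: Sequence[Vector],
                      train_y: Sequence[str], test_X: Sequence[Vector]) -> List[str]:
    """Embeddings are computed upstream; only the classifier changes here."""
    return clf.fit(train_X, train_y).predict(test_X)


# Hypothetical precomputed sentence embeddings.
train_X = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 0.8]]
train_y = ["positive", "positive", "negative", "negative"]
predictions = train_and_predict(NearestCentroid(), train_X, train_y, [[1.0, 0.0], [0.0, 1.0]])
print(predictions)  # ['positive', 'negative']
```

Because the embeddings are computed once and reused, trying a different classifier means changing only the object passed to `train_and_predict`; in principle, any estimator exposing the same `fit`/`predict` shape could be passed instead.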

Benchmark performance

To validate the pipeline, we evaluated it on four public datasets. On the three-class Twitter US Airline Sentiment dataset (TAS), our best model, RoBERTa-Large with CosineSimilarity fine-tuning and XGBoost, reached 88.4% accuracy and improved minority-class recall. On the balanced IMDB movie review set, the same transformer and loss function configuration paired with an SVM hit 95.9%. We then tested generalization without additional tuning: the TAS-trained model achieved 88.5% on Apple tweets, while the IMDB-trained model achieved 94.8% on Yelp reviews.
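Accuracy and minority-class recall measure different things, which matters on an imbalanced three-class set. The sketch below computes both on a tiny made-up label set; the labels and predictions are invented for illustration and are not data from the study.

```python
from typing import Dict, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of examples whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def recall_per_class(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """For each true class, the fraction of its examples that were recovered."""
    totals: Dict[str, int] = {}
    hits: Dict[str, int] = {}
    for t, p in zip(y_true, y_pred):
        totals[t] = totals.get(t, 0) + 1
        if t == p:
            hits[t] = hits.get(t, 0) + 1
    return {label: hits.get(label, 0) / n for label, n in totals.items()}


# Made-up, imbalanced example: "neutral" is the minority class.
y_true = ["negative"] * 6 + ["neutral"] * 2 + ["positive"] * 2
y_pred = ["negative"] * 6 + ["neutral", "negative"] + ["positive"] * 2

print(accuracy(y_true, y_pred))          # 0.9
print(recall_per_class(y_true, y_pred))  # {'negative': 1.0, 'neutral': 0.5, 'positive': 1.0}
```

Here a model scores 90% accuracy while recovering only half of the minority class, which is why the two figures are worth reporting side by side.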

Comparison with large language models

We benchmarked our approach against Meta's Llama-3-8B in both zero-shot and QLoRA (quantized low-rank adaptation) fine-tuned settings. In zero-shot mode, Llama-3-8B managed only about 50% accuracy (essentially random guessing) on both tasks. The QLoRA fine-tuned Llama-3-8B achieved 85.9% accuracy on the airline test set and 97.1% on IMDB.

Llama-3-8B edged out our model by 1.2 points on IMDB, but it required 7 hours of GPU time for fine-tuning and nearly three hours of inference to process the test set on an NVIDIA A100. In contrast, our complete pipeline trained end-to-end in less than 4 hours and completed inference within minutes on the same hardware configuration. On the airline task, our pipeline not only outperformed Llama-3-8B by 2.5 points, but also trained 11 times faster.

These results highlight that carefully tuned sentence transformers paired with lightweight classifiers can rival LLMs at a fraction of the computational and financial cost.
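The point gaps quoted above follow directly from the reported accuracies, as this short check shows. The dictionary names are illustrative; the numbers are the ones given in the article.

```python
# Accuracies (%) as reported in the article.
pipeline = {"airline": 88.4, "imdb": 95.9}
llama_qlora = {"airline": 85.9, "imdb": 97.1}

# Positive gap: the pipeline leads; negative gap: the fine-tuned LLM leads.
gaps = {task: round(pipeline[task] - llama_qlora[task], 1) for task in pipeline}
print(gaps)  # {'airline': 2.5, 'imdb': -1.2}
```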

Democratization of AI

By limiting expensive computation to a single fine-tuning step and leveraging mature ML (machine learning) libraries for classification, our approach enables researchers and developers with limited hardware to deploy cutting-edge sentiment analysis. Whether you are scaling up customer feedback analytics or building a lightweight NLP system for real-world deployment, this study shows how open-source tools and clever engineering can democratize AI-powered sentiment analysis.

Better yet, the system naturally balances minority classes, building in a direct remedy for skewed sentiment distributions, and delivers reliable, scalable performance in real-world settings. An open-source repository makes replication and domain adaptation easier.

Conclusion

High-performance sentiment analysis does not have to be the exclusive domain of large language models. Targeted fine-tuning of sentence transformers, combined with the judicious use of lightweight classifiers, unlocks fast, accurate, and generalizable pipelines that run on everyday hardware. Going forward, we will extend this framework to topic modeling and topic summarization, providing concise, real-time insights into emerging customer concerns alongside sentiment scores.

So, what does this mean for the future of AI? Whether the goal is customer insights, real-time moderation, or ethical AI, smarter and more efficient models can put high-performance sentiment analysis within everyone's reach.

This story is part of Science X Dialog, where researchers can report findings from their published research articles. Visit this page for information about Science X Dialog and how to participate.

More information: Agni Siddhanta et al, Sentiment Showdown - Sentence Transformers stand their ground against Language Models, Procedia Computer Science (2025). doi:10.1016/j.procs.2025.03.161

Agni Siddhanta is a data scientist specializing in machine learning and AI. He holds an MS in Analytics from Georgia State University, USA. Agni has played key roles at organizations such as LexisNexis Risk Solutions and Mott MacDonald. His recent research on fine-tuned sentence transformers for sentiment classification was published in Procedia Computer Science after acceptance at the 3rd International Workshop on Human-centered Innovation and Computational Intelligence 2025, and demonstrates his talent for combining cutting-edge technology with human-centered insights. Agni has also contributed to the academic community as a reviewer for the HEAL Workshop at NeurIPS 2024 and CHI 2025, and as a technical committee member of the 18th International Conference on Advanced Computer Theory and Engineering (ICACTE 2025). His industry leadership and academic engagement underscore his commitment to advancing AI research and deploying scalable, accessible solutions.

Citation: Democratizing AI-powered sentiment analysis (2025, July 21), retrieved from https://techxplore.com/news/2025-07-democratizing-ai-powered-sentiment-anasys.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.