Credit: University of California – San Diego

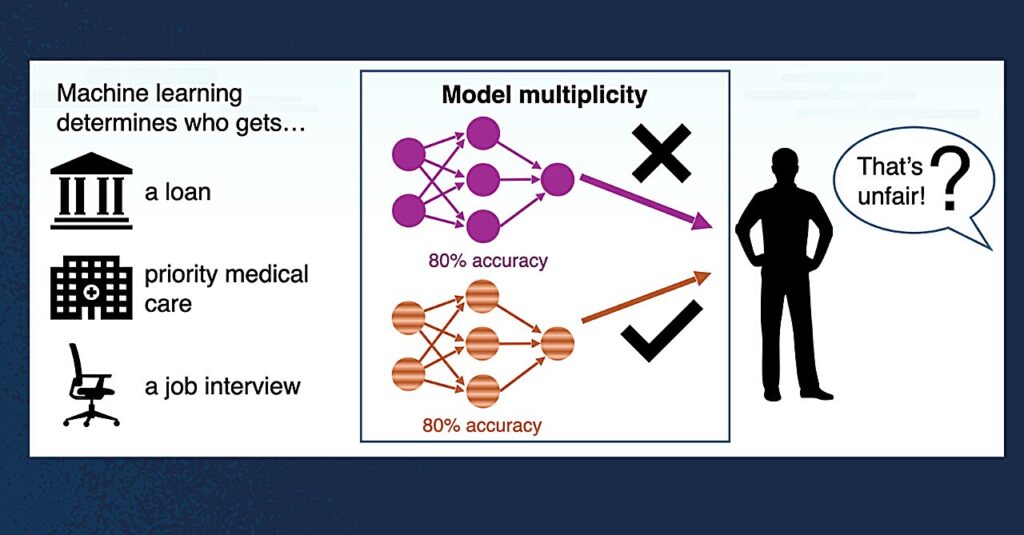

Machine learning is now an integral part of high-stakes decision-making across a wide range of human-computer interactions. You apply for a job. You submit a loan application. An algorithm determines who advances and who is rejected.

Computer scientists at the University of California, San Diego and the University of Wisconsin – Madison are challenging the common practice of using a single machine learning (ML) model to make such important decisions. They asked how people feel when “equally good” ML models reach different conclusions.

Associate Professor Loris D’Antoni of the Department of Computer Science and Engineering at the Jacobs School of Engineering led the study, which was presented recently at the Conference on Human Factors in Computing Systems (CHI 2025). The paper, “Perceptions of the Fairness Impacts of Multiplicity in Machine Learning,” describes work that D’Antoni began with fellow researchers during his tenure at the University of Wisconsin and is continuing today at UC San Diego. It is available on the arXiv preprint server.

D’Antoni worked with team members to build on existing evidence that different models, like their human counterparts, can produce different outcomes. In other words, one good model may reject an application while another approves it. Naturally, this raises the question of how objective decisions can be reached.
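The situation described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the study: the eight made-up loan records, the two threshold rules `model_a` and `model_b`, and the cutoff values. It shows two rules that score the same accuracy on past data yet disagree about a new applicant.

```python
# Toy, made-up records: (income in $1000s, credit score, loan was repaid?)
HISTORY = [
    (30, 600, False),
    (40, 620, False),
    (60, 700, True),
    (80, 720, True),
    (55, 630, True),
    (45, 680, True),
    (70, 640, False),
    (35, 660, False),
]


def model_a(income, credit):
    """Hypothetical rule: approve when income is at least $50k."""
    return income >= 50


def model_b(income, credit):
    """Hypothetical rule: approve when the credit score is at least 650."""
    return credit >= 650


def accuracy(model, data):
    """Fraction of records where the model's decision matches the outcome."""
    hits = sum(model(income, credit) == repaid for income, credit, repaid in data)
    return hits / len(data)


def disagreements(data):
    """Records on which the two rules reach opposite decisions."""
    return [(i, c) for i, c, _ in data if model_a(i, c) != model_b(i, c)]


if __name__ == "__main__":
    print("accuracy A:", accuracy(model_a, HISTORY))
    print("accuracy B:", accuracy(model_b, HISTORY))
    print("records where A and B disagree:", disagreements(HISTORY))
    # A new applicant with income $52k and credit score 610:
    print("A approves:", model_a(52, 610), "| B approves:", model_b(52, 610))
```

Both rules are "equally good" by the accuracy measure, so the choice between them decides this applicant's outcome.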

“ML researchers posit that current practices pose a fairness risk. Our study dug deeper into this problem. We asked lay stakeholders, or ordinary people, how they think decisions should be made when multiple highly accurate models give different predictions for a given input,” said D’Antoni.

The study uncovered several important findings. First, stakeholders balked at the standard practice of relying on a single model, especially when multiple models disagreed. Second, participants rejected the notion that decisions should be randomized in such cases.

“We find these results interesting because these preferences contrast with standard practice in ML development and with philosophical research on fair practices,” said Anna Meyer, a student advised by D’Antoni who will start as an assistant professor at Carleton College in the fall.

The team hopes that these insights will guide future model development and policy. Key recommendations include expanding the search across a range of models and bringing in human decision-makers to adjudicate disagreements.
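One way to picture these recommendations is a minimal sketch, assuming a small set of hypothetical rules (`by_income`, `by_credit`, `by_debt_ratio`) and a hypothetical `decide` helper, none of which come from the paper. A case is decided automatically only when every model agrees; any disagreement is routed to a person.

```python
from typing import Callable, Dict, Sequence

Applicant = Dict[str, float]
Model = Callable[[Applicant], bool]


# Three hypothetical, comparably plausible approval rules (illustrative only).
def by_income(a: Applicant) -> bool:
    return a["income"] >= 50


def by_credit(a: Applicant) -> bool:
    return a["credit"] >= 650


def by_debt_ratio(a: Applicant) -> bool:
    return a["debt_ratio"] <= 0.4


MODELS: Sequence[Model] = (by_income, by_credit, by_debt_ratio)


def decide(applicant: Applicant, models: Sequence[Model] = MODELS) -> str:
    """Automate only unanimous cases; send any disagreement to a human."""
    votes = {model(applicant) for model in models}
    if votes == {True}:
        return "approve"
    if votes == {False}:
        return "reject"
    return "human review"


if __name__ == "__main__":
    borderline = {"income": 52, "credit": 610, "debt_ratio": 0.3}
    print(decide(borderline))
```

Requiring unanimity is only one possible policy; it is used here because it avoids both relying on a single model and randomizing the outcome.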

Other members of the research team include Aws Albarghouthi, an associate professor of computer science at the University of Wisconsin, and Yea-Seul Kim of Apple.

More information: Anna P. Meyer et al., Perceptions of the Fairness Impacts of Multiplicity in Machine Learning, arXiv (2024). DOI: 10.48550/arxiv.2409.12332

Journal information: arXiv

Provided by the University of California – San Diego

Citation: Do machine learning models make fair decisions when the stakes are high? (July 17, 2025) Retrieved July 18, 2025 from https://techxplore.com/news/2025-07-takes-high-machine-fair-decisions.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.