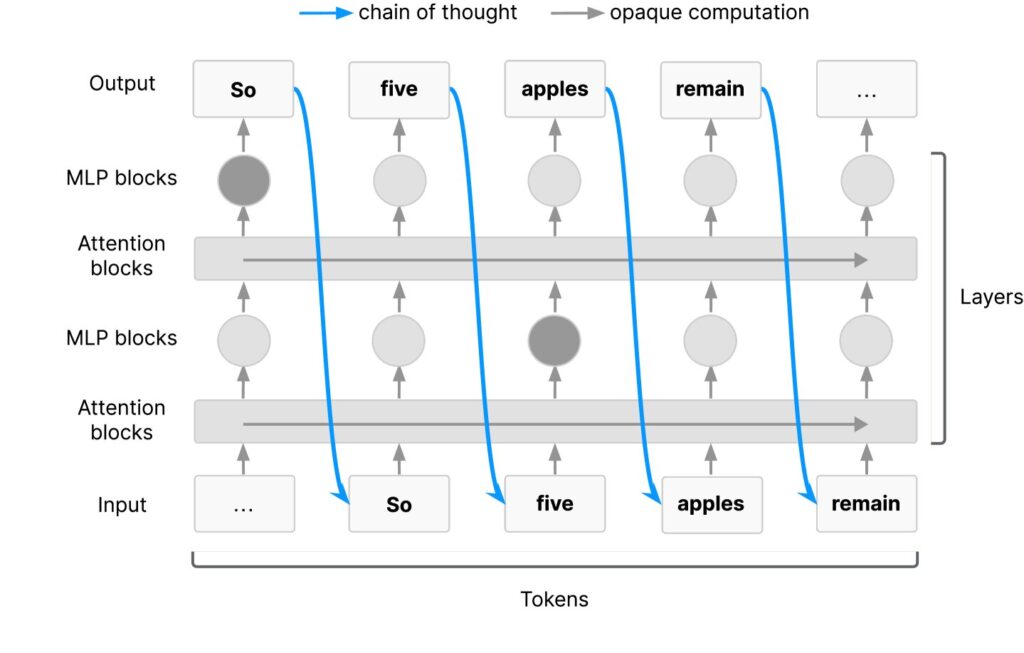

Long serial chains of cognition must pass through the chain of thought. Credit: arXiv (2025). DOI: 10.48550/arxiv.2507.11473

Artificial intelligence is advancing at a dizzying speed. Like many new technologies, it offers great benefits but also poses safety risks. Recognizing the potential dangers, leading researchers from Google DeepMind, OpenAI, Meta, Anthropic, and a coalition of companies and nonprofit groups are calling for more to be done to monitor how AI systems “think.”

In a joint paper published earlier this week and endorsed by prominent industry figures, including Geoffrey Hinton (widely regarded as the “godfather of AI”) and OpenAI co-founder Ilya Sutskever, the scientists argue that a brief window for monitoring AI reasoning may soon close.

Improved AI monitoring

They want more monitoring of chains of thought (CoTs), a technique that lets AI models solve complex problems by breaking them down into smaller steps, much as humans work through complicated tasks such as a difficult math problem.

CoTs are a key feature of advanced AI models, including DeepSeek R1 and other large language models (LLMs). However, as AI systems become more sophisticated, interpreting their decision-making processes will become even more difficult. This is a concern because existing AI oversight methods are imperfect and can miss misbehavior.

In the paper, the scientists highlight how CoT monitoring has already proved its value by detecting examples of AI misbehavior, such as when models act in a misaligned way by exploiting flaws in their reward functions during training.

The scientists believe that better monitoring of CoTs could become a valuable way to keep AI agents in check as they grow more capable.

“CoT monitoring presents a valuable addition to safety measures for frontier AI, offering a rare glimpse into how AI agents make decisions,” the researchers say. “Yet, there is no guarantee that the current degree of visibility will persist. We encourage the research community and frontier AI developers to make best use of CoT monitorability and study how it can be preserved.”

One key request from the researchers is that AI developers study what makes CoTs monitorable. In other words, how can we better understand how an AI model arrives at its answers? They also want developers to study how CoT monitorability could be included as a safety measure.

The joint paper marks a rare moment of unity among fiercely competitive tech giants, underscoring how seriously they take safety. As AI systems become more powerful and more integrated into society, ensuring their safety has never been more important or urgent.

Written by Paul Arnold, edited by Gaby Clark, and fact-checked and reviewed by Andrew Zinin. This article is the result of careful human work.

More information: Tomek Korbak et al, Chain of Thought Monitorability: A New and Fragile Opportunity for AI Safety, arXiv (2025). DOI: 10.48550/arxiv.2507.11473

Journal information: arXiv

©2025 Science X Network

Citation: Tech giants warn window to monitor AI reasoning is closing, urge action (July 17, 2025). Retrieved from https://techxplore.com/news/2025-07-07-tech-giants-window-ai-urge.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.