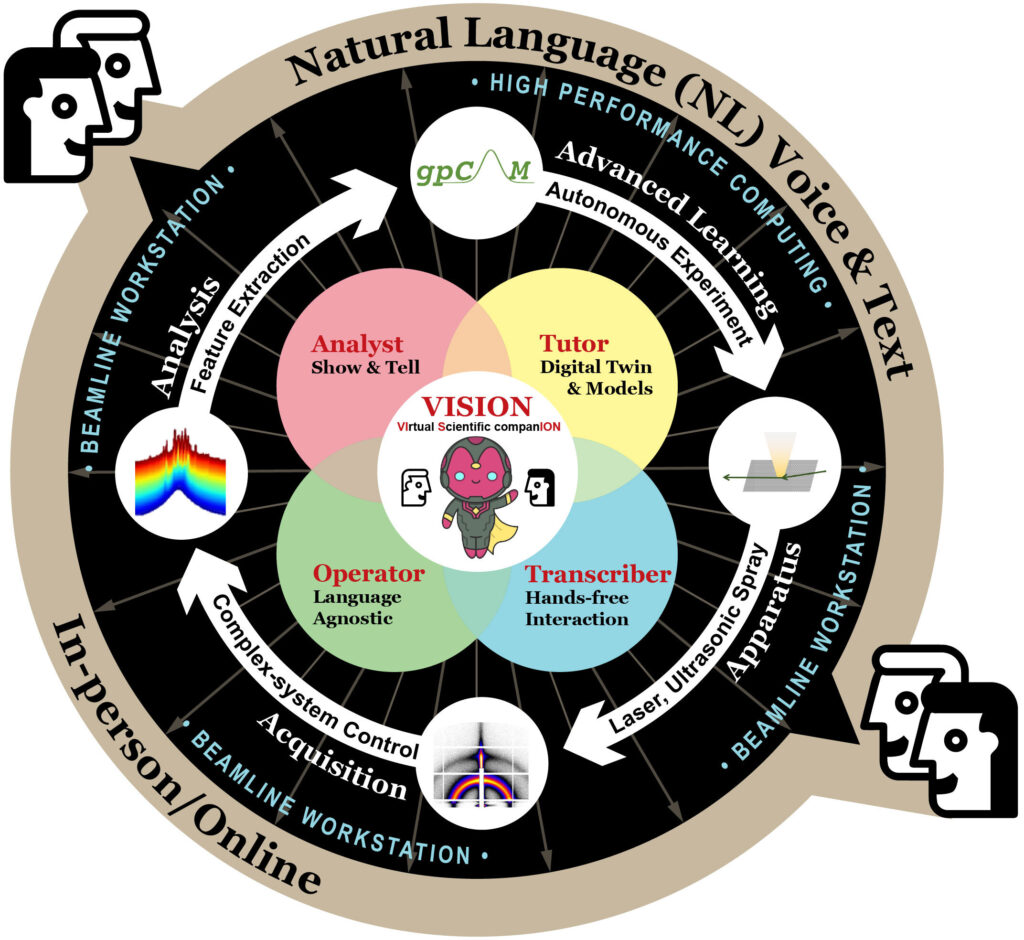

Vision aims to lead scientific exploration of natural-language control through human-AI collaboration, accelerating scientific discovery at user facilities. Credit: Brookhaven National Laboratory

A team of scientists at the US Department of Energy's (DOE) Brookhaven National Laboratory has dreamed up, developed, and tested a novel voice-controlled artificial intelligence (AI) assistant designed to break down everyday barriers for busy scientists.

The generative AI tool, known as the Virtual Scientific Companion, or Vision, was developed by researchers at the Lab's Center for Functional Nanomaterials (CFN) with support from experts at the National Synchrotron Light Source II (NSLS-II).

The idea is that a user simply tells Vision in plain language what they want to do at an instrument, and the AI companion, tailored to that instrument, takes on the task, whether that means running an experiment, launching data analysis, or visualizing results. The Brookhaven team recently shared details about Vision in a paper published in Machine Learning: Science and Technology.

“I’m really excited about how AI can impact science, and it’s something we as a scientific community should definitely explore,” said Esther Tsai, a scientist in CFN’s AI-Accelerated Nanoscience Group. “What we can’t deny is that brilliant scientists spend a lot of their time on routine work. Vision acts as an assistant that scientists and users can talk to for answers to basic questions about an instrument’s capabilities and operation.”

Vision highlights the close partnership between CFN and NSLS-II, two DOE Office of Science user facilities at Brookhaven Lab. Together, they collaborate on the setup, scientific planning, and data analysis of experiments at three NSLS-II beamlines, highly specialized measurement tools that allow researchers to explore the structure of materials using X-ray beams.

Inspired to reduce the bottlenecks associated with using NSLS-II beamlines, Tsai received a DOE Early Career Award in 2023 to develop this new concept. Tsai is now working with NSLS-II beamline scientists to launch and test the system at the Complex Materials Scattering (CMS) beamline at NSLS-II, demonstrating the first voice-controlled experiment at an X-ray scattering beamline and marking progress toward a world of AI-augmented discovery.

“At Brookhaven Lab, we are not only leading research into this frontier concept of a scientific virtual companion, we are also deploying this AI technique on the experimental floor at NSLS-II and exploring how it can be useful to our users,” Tsai said.

Talk to AI for flexible workflows

Vision leverages the growing capabilities of large language models (LLMs), the technology at the heart of popular AI assistants such as ChatGPT.

An LLM is an expansive program that generates text modeled on natural human language. Vision uses this concept not only to generate text that answers questions, but also to generate decisions about what to do and computer code that drives an instrument. Internally, Vision is organized into multiple “cognitive blocks,” or cogs, each containing an LLM that handles a specific task. Multiple cogs can be combined to form a capable assistant, with the cogs working transparently for the scientist.

“A user can simply go to the beamline and say, ‘I want to select a specific detector,’ or ‘I want to take a measurement every minute for five seconds,’ or ‘I want to raise the temperature,’ and Vision will translate that command into code.”

These natural-language inputs, whether speech, text, or both, are first fed to Vision’s “classifier” cog, which decides what type of task the user is asking for. The classifier then routes the request to the right cog for the task, such as an “operator” cog for instrument control or an “analyst” cog for data analysis.
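To make the routing idea concrete, here is a minimal sketch of a classifier dispatching requests to task-specific cogs. It is not Vision’s code: the `Cog` class, `keyword_classifier`, and `route` are hypothetical names, and the keyword matching is an assumed stand-in for what the article describes as an LLM-based classifier cog.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Cog:
    """Hypothetical cog: in Vision each cog wraps an LLM; here it is a plain function."""
    name: str
    handle: Callable[[str], str]


def keyword_classifier(request: str) -> str:
    """Toy classifier that picks a task label from keywords.

    Assumption: the real classifier cog uses an LLM, not keyword matching.
    """
    text = request.lower()
    if any(word in text for word in ("plot", "analyze", "fit", "average")):
        return "analyst"
    return "operator"  # default: treat the request as instrument control


def route(request: str, classifier: Callable[[str], str], cogs: Dict[str, Cog]) -> str:
    """Classify the request, then hand it to the matching cog."""
    label = classifier(request)
    return cogs[label].handle(request)


# Placeholder cogs that just echo what kind of code they would generate.
cogs = {
    "operator": Cog("operator", lambda r: f"# operator code for: {r}"),
    "analyst": Cog("analyst", lambda r: f"# analysis code for: {r}"),
}

print(route("I want to raise the temperature", keyword_classifier, cogs))
# → # operator code for: I want to raise the temperature
```

Keeping the classifier as a separate, swappable function mirrors the article’s point that cogs are independent building blocks that can be combined.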

Then, in just a few seconds, the system translates the input into code that is passed back to the beamline workstation, where the user can review it before running it. On the back end, everything runs on “HAL,” a CFN server optimized for running AI workloads on graphics processing units (GPUs).
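The review step described above amounts to a human-in-the-loop gate: generated code is held until the user approves it. The sketch below illustrates that pattern under stated assumptions; `PendingCommand`, `review_and_run`, and the `set_temperature(80)` string are all hypothetical and do not come from Vision or any beamline control library.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PendingCommand:
    """Hypothetical container for generated code awaiting user review."""
    code: str
    approved: bool = False


def review_and_run(
    cmd: PendingCommand,
    approve: Callable[[str], bool],
    execute: Callable[[str], None],
) -> bool:
    """Show the code to the reviewer and call execute() only if they approve."""
    cmd.approved = approve(cmd.code)
    if cmd.approved:
        execute(cmd.code)
    return cmd.approved


# Usage: "execution" here just records the code in a list instead of
# touching real hardware.
log: List[str] = []
cmd = PendingCommand(code="set_temperature(80)")  # hypothetical instrument call
ran = review_and_run(cmd, approve=lambda code: True, execute=log.append)
print(ran, log)  # → True ['set_temperature(80)']
```

Passing `approve` and `execute` as functions keeps the gate independent of how code is displayed or run, so a rejected command is simply never executed.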

Vision’s use of natural language, the way people normally speak, is its key advantage. Because the system is tailored to the instrument researchers are using, they are freed from spending time setting up software parameters and can instead focus on the science they are pursuing.

“Vision acts as a bridge between users and the instrument: users can simply talk to the system, and the system takes care of driving the experiments,” said co-author Noah van der Vleuten, who helped develop Vision’s code-generation capability and testing framework. “We can imagine this making experiments more efficient and giving people more time to focus on the science, rather than becoming experts in each instrument’s software.”

Team members noted that the ability to speak to Vision, not just type a prompt, could make workflows even faster.

In a world where AI continues to evolve rapidly, Vision’s creators also set out to build a scientific tool that can keep up with improving technology, incorporate new instrument capabilities, and scale up as needed to navigate multiple tasks seamlessly.

“A key guiding principle is that we wanted it to be modular and adaptable, so we can quickly swap in new AI models as they become more powerful,” said Shray Mathur, first author of the paper, who worked on Vision’s audio-understanding capabilities and overall architecture. “As the underlying models get better, Vision gets better. It’s very exciting because we get to work on some of the latest technology and deploy it right away. We’re building a system that can really benefit our users through our research.”
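One common way to get the modularity Mathur describes is to have each cog depend only on a small model interface, so the model behind it can be replaced without touching the rest of the system. This is a minimal sketch assuming that design; `LanguageModel`, `EchoModel`, `UpperModel`, and `Cog` are hypothetical, and the two toy models stand in for real LLM backends.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Assumed minimal interface: any object with generate(prompt) -> str fits."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Placeholder backend; a real deployment would call an actual LLM here."""

    def generate(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperModel:
    """A second placeholder backend, standing in for a newer model."""

    def generate(self, prompt: str) -> str:
        return prompt.upper()


class Cog:
    """Hypothetical cog that delegates all text generation to its model."""

    def __init__(self, name: str, model: LanguageModel) -> None:
        self.name = name
        self.model = model

    def swap_model(self, model: LanguageModel) -> None:
        # Replacing the backend leaves the cog's interface unchanged.
        self.model = model

    def run(self, request: str) -> str:
        return self.model.generate(f"[{self.name}] {request}")


cog = Cog("operator", EchoModel())
print(cog.run("take a measurement"))  # → echo: [operator] take a measurement
cog.swap_model(UpperModel())
print(cog.run("take a measurement"))  # → [OPERATOR] TAKE A MEASUREMENT
```

Because callers only ever use `Cog.run`, upgrading the model is a one-line change, which is the property the quote emphasizes: as the underlying models get better, the assistant gets better.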

The work builds on a history of AI and machine learning (ML) tools developed by CFN and NSLS-II to assist facility users with autonomous experiments, data analysis, and robotics. Future versions of Vision could serve as a natural interface to these advanced AI/ML tools.

The team behind Vision at the CMS beamline. From left to right: co-authors and CFN scientists Shray Mathur, Noah van der Vleuten, Kevin Yager, and Esther Tsai. Credit: Kevin Coughlin/Brookhaven National Laboratory

A vision for human-AI partnership

Now that the Vision architecture has been developed and is being actively demonstrated at the CMS beamline, the team aims to test it further with beamline scientists and users and, eventually, to bring the virtual AI companion to additional beamlines.

This way, the team can have real conversations with users about what truly helps them, Tsai said.

“The CFN/NSLS-II collaboration is really unique in the sense that we are working together on this frontier AI development, using language models on the experimental floor, at the front line of supporting users,” Tsai said. “We’re getting feedback to better understand what users need and how we can best support them.”

Tsai thanked CMS lead beamline scientist Ruipeng Li for his support and openness to ideas for Vision.

The CMS beamline has already served as a testing ground for AI/ML capabilities, including autonomous experiments. When the idea of bringing Vision to the beamline came up, Li saw it as an exciting and fun opportunity.

“We’ve been close collaborators and partners since the beamline was built more than eight years ago,” Li said. “These concepts allow us to build on the beamline’s potential and continue to push the boundaries of AI/ML applications for science. We want to see what we can learn from this process, because we are riding the AI wave now.”

In the bigger picture of AI-augmented scientific research, the development of Vision is a step toward realizing other AI concepts across the DOE complex, including a science exocortex.

Kevin Yager, leader of CFN’s AI-Accelerated Nanoscience Group and a Vision co-author, envisions the exocortex as an extension of the human brain that researchers can interact with through conversation to generate inspiration and imagination for scientific discovery.

“When I imagine the future of science, I see an ecosystem of AI agents working in coordination to help advance research,” Yager said. “The Vision system is an early example of this future: an AI assistant that helps you operate an instrument. We want to build more AI assistants and connect them into a really powerful network.”

More information: Shray Mathur et al, Vision: A Modular AI Assistant for Natural Human-Instrument Interaction at Scientific User Facilities, Machine Learning: Science and Technology (2025). DOI: 10.1088/2632-2153/add9e4

Provided by Brookhaven National Laboratory

Citation: Interactive virtual companion to accelerate discoveries at scientific user facilities (2025, June 26), retrieved from https://techxplore.com/news/2025-06-interactive-companion-discoveries-scientific.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.