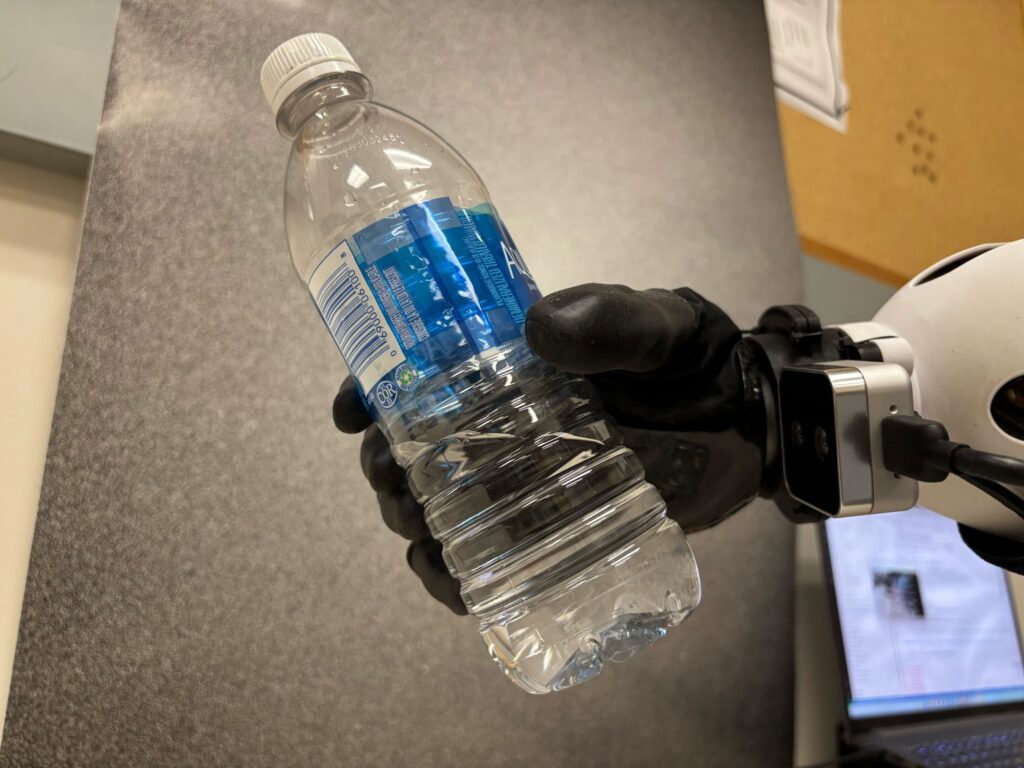

An autonomous prosthetic hand grasps a bottle using vision and touch sensors. Credit: Kaijie Shi of Xianta Jiang’s Lab, Memorial University.

Losing a limb after an injury, accident, or illness can significantly reduce a person's quality of life and make everyday activities difficult. Recent technological advances, however, have opened up exciting new possibilities for developing prosthetic limbs that are more comfortable, smarter, and more intuitive.

Many smart prostheses developed over the past decade are controlled by myoelectric signals, which are electrical signals generated by muscles and picked up by sensors attached to the wearer’s skin. While some of these systems have proven highly effective, users must consciously generate specific muscle signals to perform the desired movements, which can be physically and mentally demanding.

Researchers at Memorial University of Newfoundland in Canada recently developed a new automated method for controlling the movement of prosthetic limbs that does not depend on myoelectric or other biological signals. The proposed control system, outlined in a paper posted to the preprint server arXiv, is built on a machine learning model trained on video footage of a prosthetic limb completing specific tasks, which allows it to autonomously plan and execute the movements those tasks require.

“The idea for this paper came from our desire to make prosthetic hands easier to use,” Xianta Jiang, senior author of the paper, told Tech Xplore. “Traditional systems rely on muscle signals, which can be difficult and tiring for users to control. We wanted to investigate whether an autonomous system could take over some of that effort, like a robot that can ‘see’ and ‘feel’ the world.”

The main goal of the recent study by Jiang and his colleagues was to create a prosthetic hand that can autonomously sense its surroundings and perform grasping tasks. Instead of planning movements based on biological signals or commands sent by the user, the control system the researchers created relies on data collected by a small camera attached to the prosthetic wrist, as well as sensors that detect both touch and movement.

“These inputs are combined using artificial intelligence (AI), specifically a technique called imitation learning,” explained Kaijie Shi, first author of the paper. “The AI model learns from past demonstrations, basically by watching how objects are picked up, held and released. The hand then uses this knowledge to make decisions in real time. What’s unique is that the system does not depend on muscle signals. It works by ‘understanding’ the object and the task, which makes it more natural and intuitive for the user.”
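To make the idea of learning from demonstrations concrete, here is a minimal sketch of imitation learning in its simplest form, sometimes called behavior cloning. It is not the researchers' model: their system learns from camera footage and sensor data, whereas this toy version assumes two made-up features (distance to the object and fingertip contact force), invented action names, and a nearest-neighbor lookup in place of a trained neural network.

```python
from math import dist

# Hypothetical demonstrations: (observation, action taken by the demonstrator).
# Observation = (distance to object in cm, fingertip contact force in N).
# Both features and all action names are assumptions made for illustration.
DEMONSTRATIONS = [
    ((30.0, 0.0), "approach"),
    ((20.0, 0.0), "approach"),
    ((3.0, 0.0), "close_hand"),
    ((1.0, 0.2), "close_hand"),
    ((0.5, 2.0), "hold"),
    ((0.5, 2.5), "hold"),
]


def clone_policy(demonstrations):
    """Build a policy that copies the action of the most similar demonstration."""

    def policy(observation):
        # Find the demonstrated observation closest to the current one
        # (Euclidean distance) and imitate the action recorded with it.
        _, action = min(demonstrations, key=lambda d: dist(d[0], observation))
        return action

    return policy


policy = clone_policy(DEMONSTRATIONS)

# At run time the hand would query the policy with fresh sensor readings.
for reading in [(25.0, 0.0), (2.0, 0.1), (0.6, 2.2)]:
    print(reading, "->", policy(reading))
```

The point of the sketch is that the wearer never issues a command: the action comes entirely from what the sensors report and what the demonstrations showed in similar situations.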

To test the newly developed control system, the researchers deployed it on a real prosthetic hand and ran a series of experiments in real-world settings. They found that their system allowed the prosthesis to successfully grasp the desired items, even when trained on only a few videos showing a single person handling a limited set of objects.

“Our system performed autonomous grasping and releasing tasks with a success rate of over 95%,” Jiang said. “This is a major step toward creating a prosthetic hand that works automatically and reliably in everyday settings. In practice, this means that future prosthetic users could benefit from devices that help them complete common tasks, such as picking up cups or opening doors, without having to constantly think about every movement.”

The researchers plan to keep improving the imitation learning-based approach they have developed and to test it in a broader range of experiments involving people who would benefit from more advanced prosthetic systems. In the future, they hope their system will contribute to the advancement of commercially available prosthetic hands and reduce the effort required to operate them.

“Next, we want to test the system with real prosthetic users and gather feedback from them,” Jiang added. “We also plan to improve the system’s ability to adapt to a variety of environments and more complex tasks, such as handling soft or irregularly shaped objects. Another goal is to explore how this technology could be used in other assistive devices, such as exoskeletons for stroke recovery.”

Written by Ingrid Fadelli, edited by Lisa Lock, and fact-checked and reviewed by Robert Egan.

More information: Kaijie Shi et al, Towards Biosignals-Free Autonomous Prosthetic Hand Control via Imitation Learning, arXiv (2025). DOI: 10.48550/arxiv.2506.08795

Project Page: sites.google.com/view/autonomous-prusthetichd

Journal information: arXiv

©2025 Science X Network

Citation: The new system ensures control of prosthetic movements without relying on biological signals (2025, June 18) retrieved June 24, 2025 from https://news/2025-06

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without written permission. The content is provided for information purposes only.